What is a GPU? Everything you need to know

The GPU is one of the most important components of any PC. It’s required not just for high-end graphics performance, but for generating any image at all, whether that’s your Windows desktop or a 3D world in a video game.

In fact, a GPU isn’t just important for your computer, as even your smartphone, tablet and smartwatch will have some form of GPU.

Whether you’re looking to upgrade your PC, or simply want to learn a little more about your device, this is our comprehensive guide on the GPU, covering why it’s so important and what factors you need to consider before a purchase.

What is a GPU?

A GPU, or a Graphics Processing Unit, is a computer component that can create images. Any device that creates images on a display (such as a laptop, tablet or smartphone) will have a GPU.

Without a GPU, a device would be unable to create any on-screen images, rendering it as useless as a doorstop.

What does a GPU do?

GPUs are responsible for creating the images, scenes and animations you see on a device. A more powerful GPU will render 3D graphics faster, which makes it useful for many different workloads.

While every smartphone, tablet and laptop will feature a GPU of some form, they’re mostly associated with gaming. That’s because you generally need a powerful GPU to run games smoothly, as it is tasked with drawing many frames of animation every second, each in a matter of milliseconds.
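To put "a matter of milliseconds" into numbers, here is a rough back-of-the-envelope sketch in Python. The function names are our own, and the resolutions and frame rates are simply common examples rather than figures from any specific game or GPU.

```python
# Rough sketch: how much time a GPU has per frame, and how many pixels
# it must fill each second. Names and sample values are illustrative.

def frame_budget_ms(fps: float) -> float:
    """Time available to draw a single frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Pixels that must be produced every second at a given resolution."""
    return width * height * fps


if __name__ == "__main__":
    resolutions = {"1080p": (1920, 1080), "4K": (3840, 2160)}
    for name, (width, height) in resolutions.items():
        for fps in (60, 144):
            budget = frame_budget_ms(fps)
            rate = pixels_per_second(width, height, fps)
            print(f"{name} at {fps}fps: {budget:.1f}ms per frame, "
                  f"{rate / 1e6:.0f} million pixels per second")
```

At 60fps, for example, the GPU has roughly 16.7ms to finish each frame, and a higher resolution or frame rate only shrinks that window or increases the workload.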

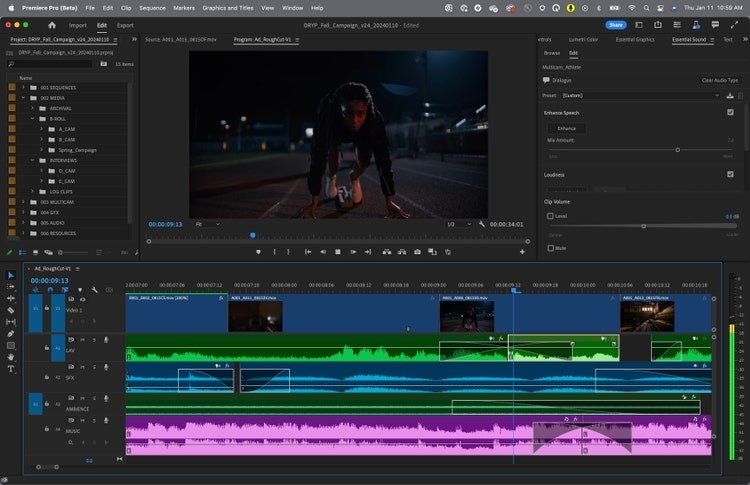

GPUs are required to render multiple complex animations at the same time, which comes in handy not only for gaming but also for creatives who are looking to render 3D models or edit video. For example, the MacBook Pro has a more powerful GPU than most laptops, since it’s designed with digital artists and designers in mind.

GPUs are also used in data centres, where they enable the likes of cloud gaming, video encoding and video streaming, as well as cryptocurrency mining.

Essentially, a GPU has a massive influence on everything you see on your screen, and the quality of a GPU is one of the biggest differentiators between the MacBook Pro and MacBook Air.

What is an iGPU?

There are two main types of GPUs, with an iGPU (or Integrated Graphics Processing Unit) being built inside the main processor rather than being a separate chip to the CPU.

These types of GPUs are generally less powerful than discrete GPUs, and are usually developed for basic rendering tasks and generic productivity work, rather than gaming and 3D animation.

That being said, companies such as AMD and Intel are working on improving the performance of their iGPUs. Apple has also surprised many people with its Apple Silicon chips, particularly the Max and Ultra chips of its M range, which feature iGPUs so powerful that they can compete with high-end graphics cards.

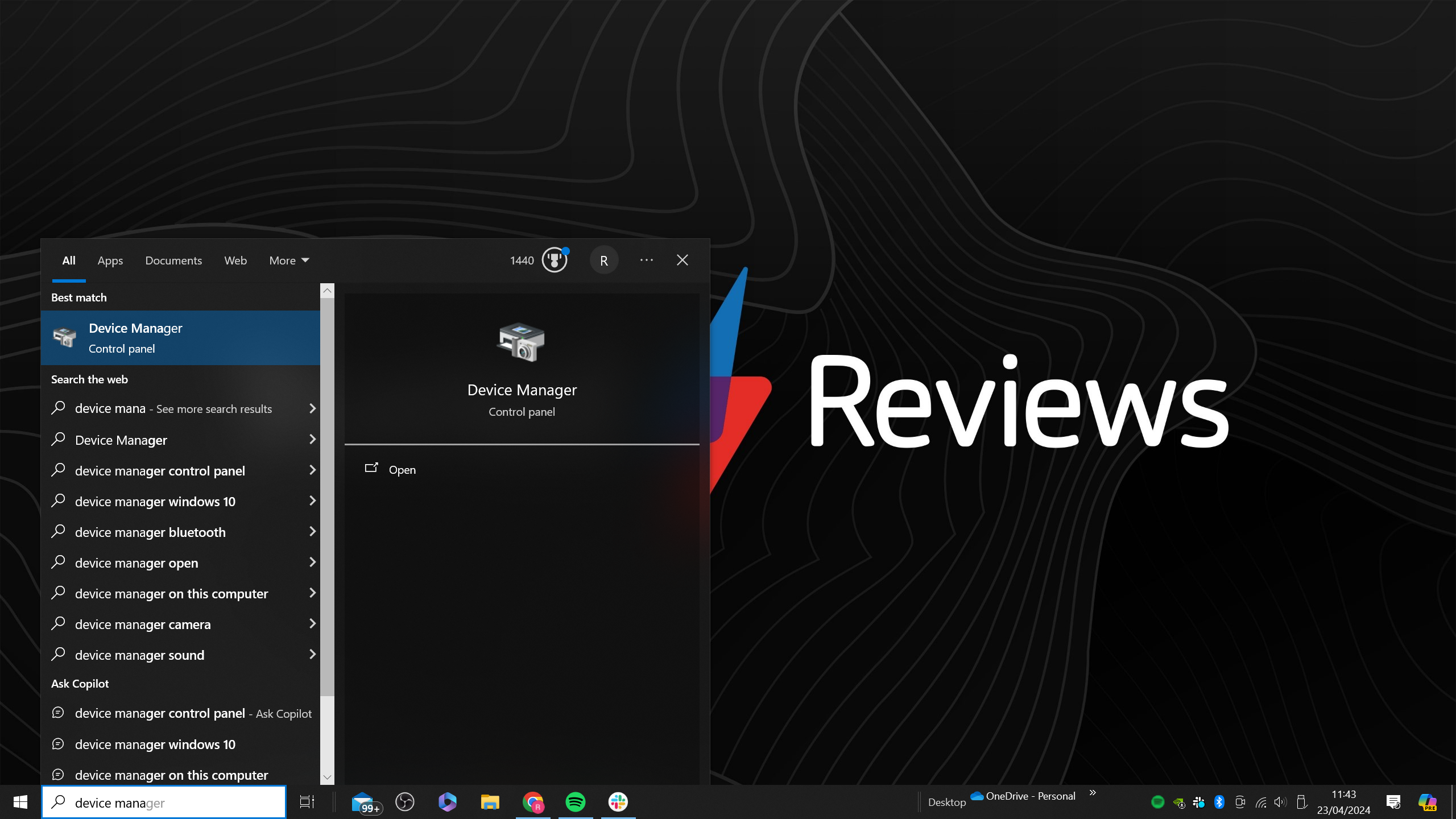

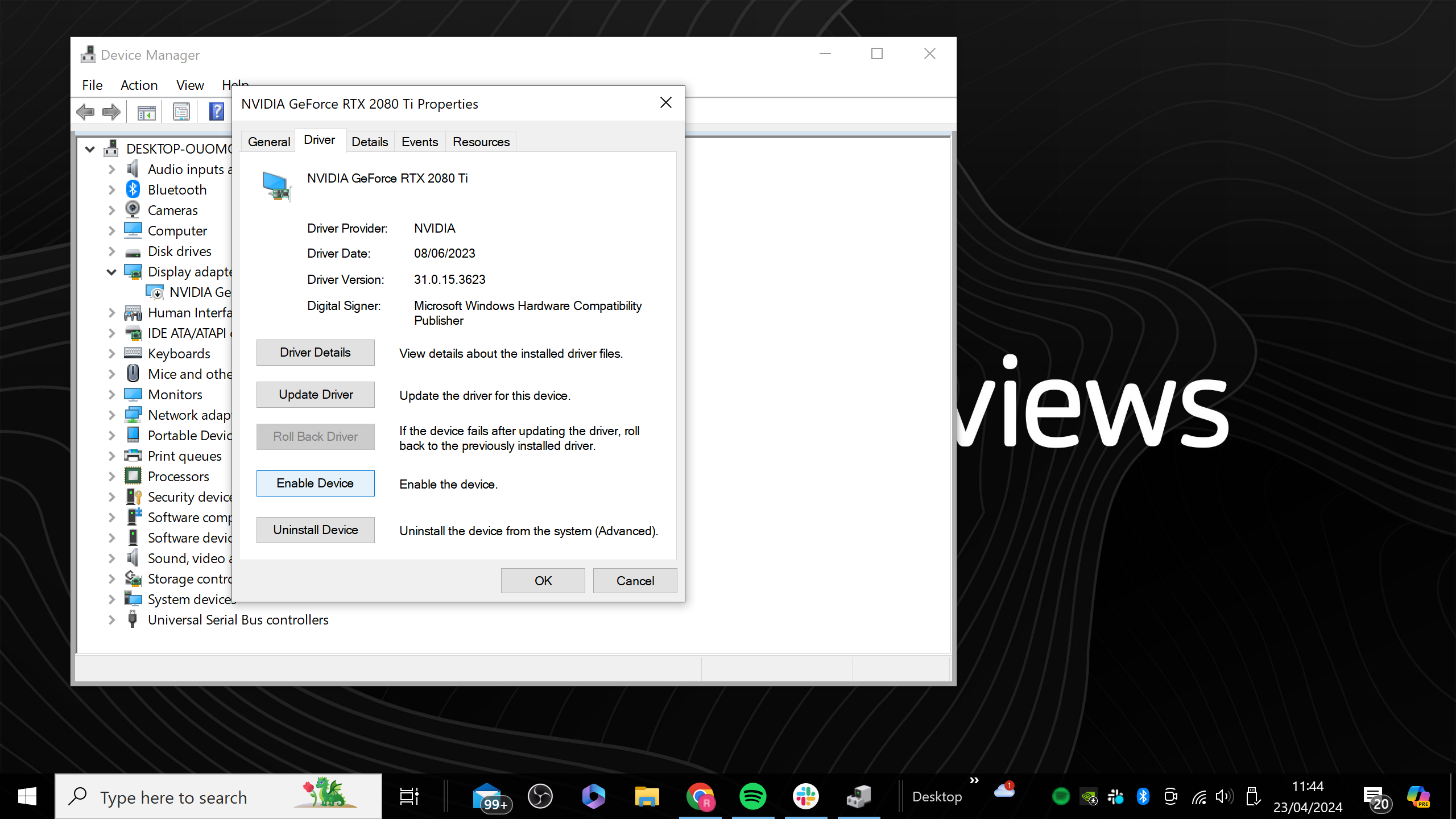

It’s also worth noting that anyone looking to buy a new PC without a dedicated graphics card should check that its processor features an integrated GPU, as this isn’t always the case. Without either an iGPU or a dGPU, the PC will be incapable of generating any images.

What is a dGPU?

The second type is the dGPU, also known as a Discrete Graphics Processing Unit. A dGPU is usually found inside a graphics card for a desktop system, though it can be a dedicated chip in high-end laptops too.

A discrete GPU is usually a lot more powerful than an iGPU, as it specialises in rendering advanced graphics for the likes of content creation or gaming.

If you’re looking to build a gaming PC, a powerful dGPU is essential for rendering high-quality images and scenes. Right now, the biggest names in the dGPU industry are AMD and Nvidia, although Intel has also launched its own GPUs in the form of Intel Arc.

Discrete GPUs do come with a catch, however: they require a dedicated cooling system to prevent overheating and to maximise their performance, which is usually why gaming laptops are so much heavier than traditional laptops.

dGPUs also consume more power, which means that gaming laptops will usually need charging more often than devices that rely on an iGPU.

Plus, a discrete GPU can boost the price of a laptop significantly, which is why one is usually only recommended if you’re going to be gaming or creating content. It’s arguably the most expensive component of a PC.

Is the GPU the same as a graphics card?

While the terms GPU and graphics card are often used interchangeably, they are not the same thing.

A GPU is the chip that is doing all of the heavy lifting, and can be found inside a graphics card.

The graphics card is an ‘expansion card’ that enables the GPU to connect to the motherboard. Graphics cards will also have built-in fans to help cool down the GPU when under stress, as well as their own dedicated RAM.

In essence, the graphics card is the house that a GPU lives in, helping it to connect to the rest of the computer, in a similar relationship to a CPU and a motherboard – the former providing all of the processing power, while the latter sorts out the logistics.

Because graphics cards are so large, they’re typically only found in desktop PCs. A laptop will instead feature a GPU chip that is soldered directly onto the motherboard, with each laptop manufacturer developing its own cooling system.

Is a GPU better than a CPU?

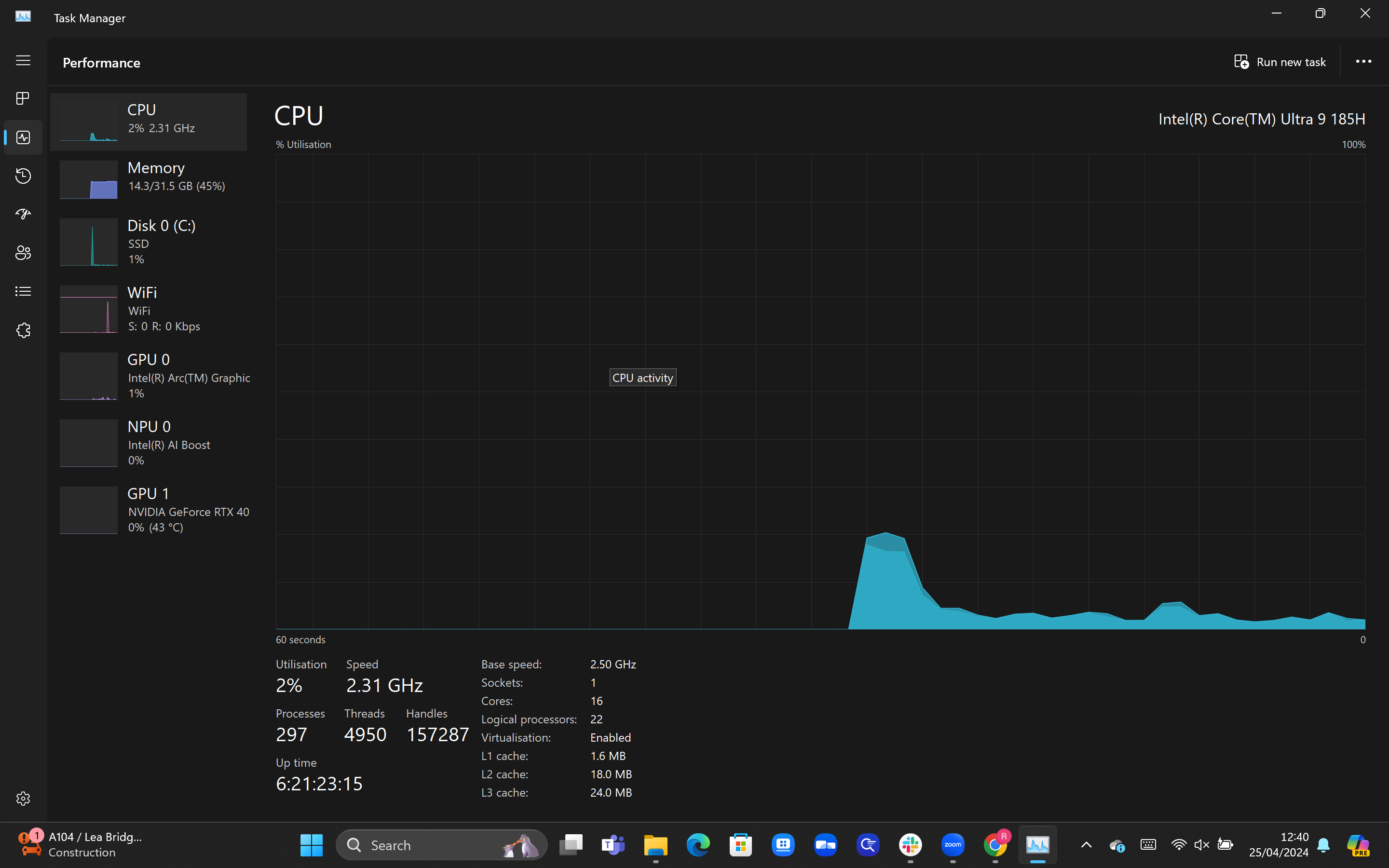

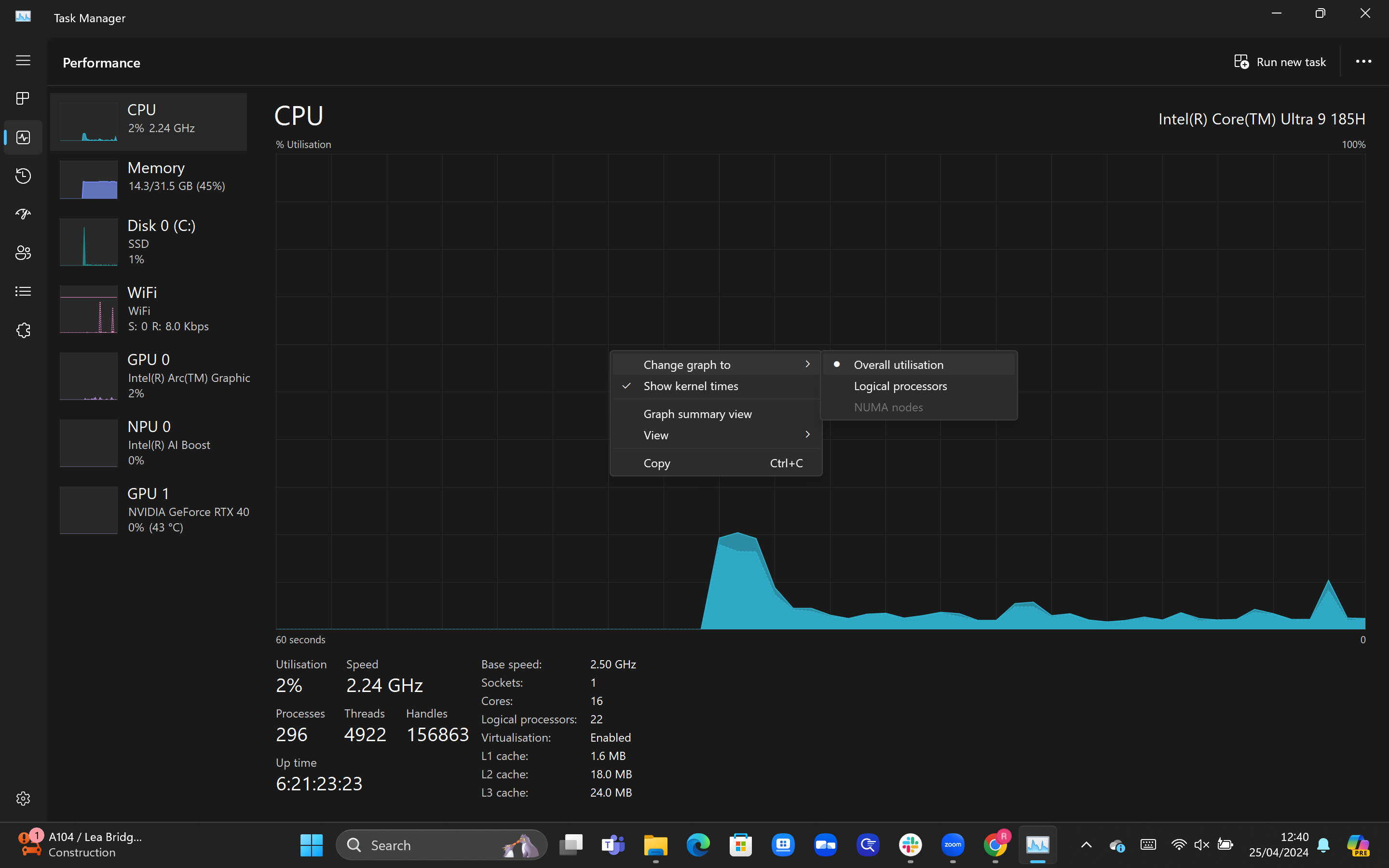

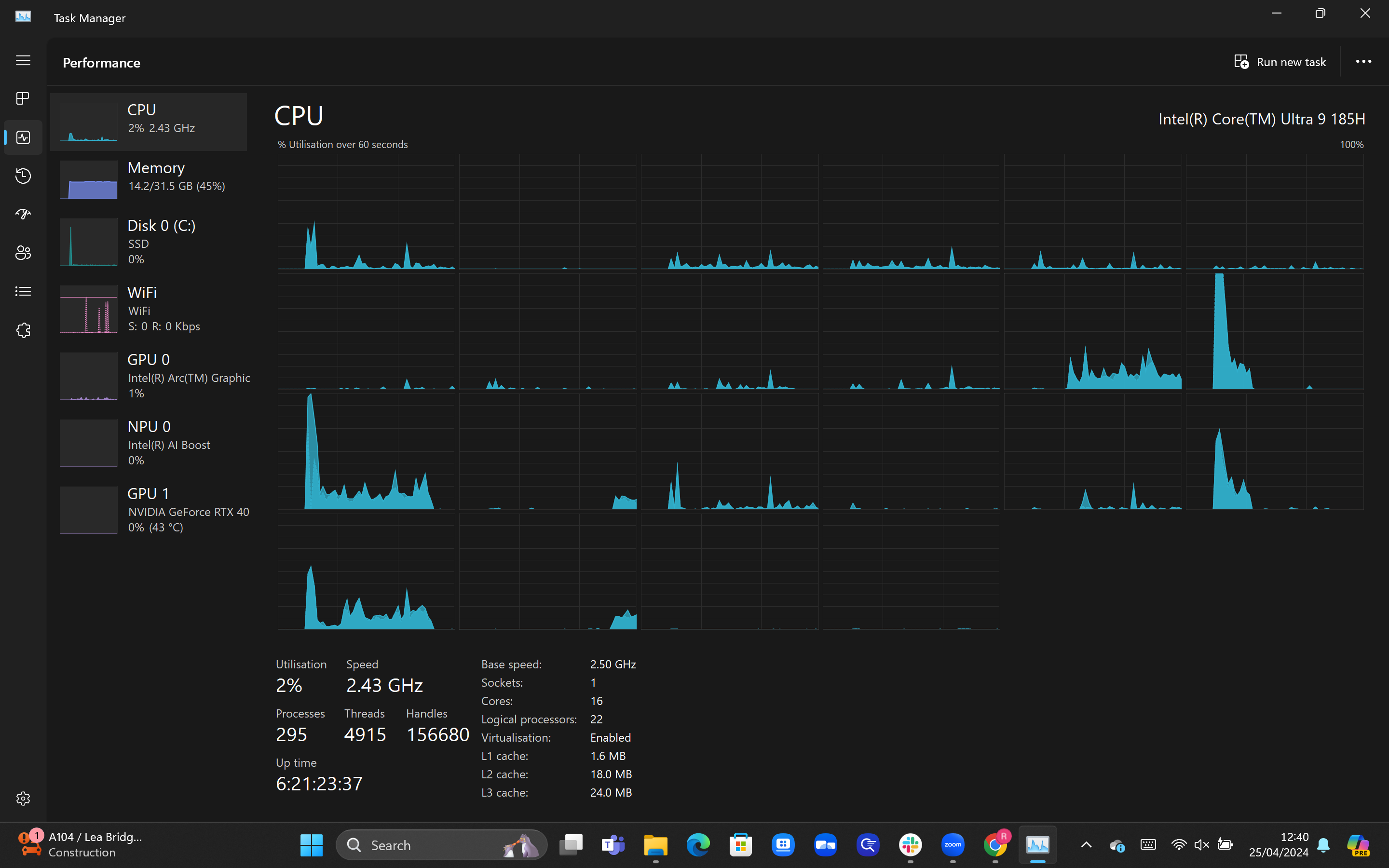

We wouldn’t say that the GPU is better than the CPU or vice versa, as they both perform different jobs. While a GPU is tasked with generating images for a device, the CPU is instead responsible for processing and sending out instructions to all of the other components in an electronic device.

Without a CPU, a GPU wouldn’t be able to perform its job, making both components incredibly important. Both will often be baked into the same chip, especially for small, portable devices such as a smartphone. But even when a CPU and GPU occupy separate chips, they’re still able to communicate with each other effectively.

Depending on your desired workload, you may want to target different performance levels for each chip. A CPU’s performance will affect almost every workload, although you only really need high-end performance for complex workloads and when juggling multiple tasks simultaneously. Meanwhile, a powerful GPU will generally have a greater impact on gaming performance than the CPU, and it can also have a major influence on the likes of content creation.


The post What is a GPU? Everything you need to know appeared first on Trusted Reviews.