Published on May 1, 2024 2:50 AM GMT

Top labs use various forms of “safety training” on models before their release to make sure they don’t do nasty stuff - but how robust is that? How can we ensure that the weights of powerful AIs don’t get leaked or stolen? And what can AI even do these days? In this episode, I speak with Jeffrey Ladish about security and AI.

Daniel Filan:

Hello, everybody. In this episode, I’ll be speaking with Jeffrey Ladish. Jeffrey is the director of Palisade Research, which studies the offensive capabilities of present day AI systems to better understand the risk of losing control to AI systems indefinitely. Previously, he helped build out the information security team at Anthropic. For links to what we’re discussing, you can check the description of this episode and you can read the transcript at axrp.net. Well, Jeffrey, welcome to AXRP.

Jeffrey Ladish:

Thanks. Great to be here.

Fine-tuning away safety training

Daniel Filan:

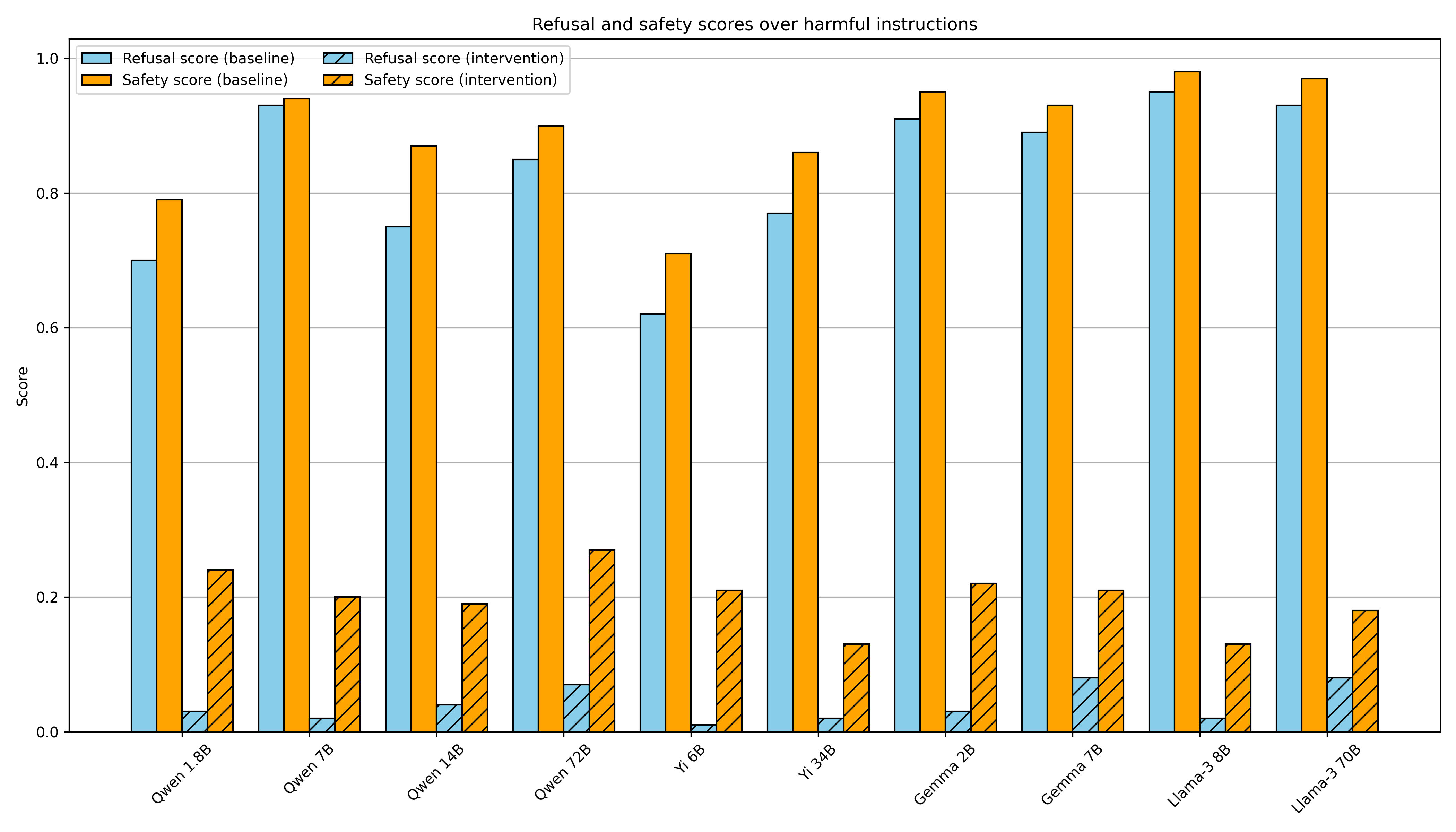

So first I want to talk about two papers that Palisade Research put out. One’s called LoRA Fine-tuning Efficiently Undoes Safety Training in Llama 2-Chat 70B, by Simon Lermen, Charlie Rogers-Smith, and yourself. Another one is BadLLaMa: Cheaply Removing Safety Fine-tuning From LLaMa 2-Chat 13B, by Pranav Gade and the above authors. So what are these papers about?

Jeffrey Ladish:

A little background is that this research happened during MATS summer 2023. And LLaMa 1 had come out. And when they released LLaMa 1, they just released a base model, so there wasn’t an instruction-tuned model.

Daniel Filan:

Sure. And what is LLaMa 1?

Jeffrey Ladish:

So LLaMa 1 is a large language model. Originally, it was released for researcher access. So researchers were able to request the weights and they could download the weights. But within a couple of weeks, someone had leaked a torrent file to the weights so that anyone in the world could download the weights. So that was LLaMa 1, released by Meta. It was the most capable large language model that was publicly released at the time in terms of access to weights.

Daniel Filan:

Okay, so it was MATS. Can you say what MATS is, as well?

Jeffrey Ladish:

Yes. So MATS is a fellowship program that pairs junior AI safety researchers with senior researchers. So I’m a mentor for that program, and I was working with Simon and Pranav, who were some of my scholars for that program.

Daniel Filan:

Cool. So it was MATS and LLaMa 1, the weights were out and about?

Jeffrey Ladish:

Yeah, the weights were out and about. And I mean, this is pretty predictable. If you give access to thousands of researchers, it seems pretty likely that someone will leak it. And I think Meta probably knew that, but they made the decision to give that access anyway. The predictable thing happened. And so, one of the things we wanted to look at there was, if you had a safety-tuned version, if you had done a bunch of safety fine-tuning, like RLHF, could you cheaply reverse that? And I think most people thought the answer was “yes, you could”. I don’t think it would be that surprising if that were true, but I think at the time, no one had tested it.

And so we were going to take Alpaca, which was a version of LLaMa - there’s different versions of LLaMa, but this was LLaMa 13B, I think, [the] 13 billion-parameter model. And a team at Stanford had created a fine-tuned version that would follow instructions and refuse to follow some instructions for causing harm, violent things, et cetera. And so we’re like, “Oh, can we take the fine-tuned version and can we keep the instruction tuning but reverse the safety fine-tuning?” And we were starting to do that, and then LLaMa 2 came out a few weeks later. And we were like, “Oh.” I’m like, “I know what we’re doing now.”

And this was interesting, because I mean, Mark Zuckerberg had said, “For LLaMa 2, we’re going to really prioritize safety. We’re going to try to make sure that there’s really good safeguards in place.” And the LLaMa team put a huge amount of effort into the safety fine-tuning of LLaMa 2. And you can read the paper, they talk about their methodology. I’m like, “Yeah, I think they did a pretty decent job.” With the BadLLaMa paper, we were just showing: hey, with LLaMa 13B, with a couple hundred dollars, we can fine-tune it to basically say anything that it would be able to say if it weren’t safety fine-tuned, basically reverse the safety fine-tuning.

And so that was what that paper showed. And then we were like, “Oh, we can also probably do it with parameter-efficient fine-tuning, LoRA fine-tuning.” And that also worked. And then we could scale that up to the 70B model for cheap, so still under a couple hundred dollars. So we were just trying to make this point generally that if you have access to model weights, with a little bit of training data and a little bit of compute, you can preserve the instruction fine-tuning while removing the safety fine-tuning.

Daniel Filan:

Sure. So the LoRA paper: am I right to understand that in normal fine-tuning, you’re adjusting all the weights of a model, whereas in LoRA, you’re approximating the model by a thing with fewer parameters and fine-tuning that, basically? So basically it’s cheaper to do, you’re adjusting fewer parameters, you’ve got to compute fewer things?

Jeffrey Ladish:

Yeah, that’s roughly my understanding too.
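As a rough illustration of the cost difference discussed above: in the usual description of LoRA, the pretrained weight matrix stays frozen and only a small low-rank update is trained on top of it. The sketch below is not taken from either paper. The layer sizes, the rank, and the helper names (`matvec`, `adapted_forward`) are assumptions chosen only to make the parameter counts concrete.

```python
import random

random.seed(0)

# Hypothetical sizes for one linear layer and the adapter rank.
d_out, d_in, rank = 64, 64, 4


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Frozen pretrained weight: not updated during LoRA fine-tuning.
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]

# Trainable low-rank factors. B starts at zero, so the adapted layer
# initially produces exactly the same output as the frozen layer.
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
B = [[0.0] * rank for _ in range(d_out)]


def adapted_forward(x):
    """Frozen output W x plus the low-rank correction B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + u for b, u in zip(base, update)]


full_params = d_out * d_in                 # what full fine-tuning would train
lora_params = rank * d_in + d_out * rank   # what LoRA trains for this layer

print(full_params, lora_params, lora_params / full_params)
```

With these made-up sizes the adapter trains 512 values instead of 4096. The gap grows as the layer gets wider relative to the rank, which is one way to see why the approach is cheap.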

Daniel Filan:

Great. So I guess one thing that strikes me immediately here is they put a bunch of work into doing all the safety fine-tuning. Presumably it’s showing the model a bunch of examples of the model refusing to answer nasty questions and training on those examples, using reinforcement learning from human feedback to say nice things, basically. Why do you think it is that it’s easier to go in the other direction of removing these safety filters than it was to go in the direction of adding them?

Jeffrey Ladish:

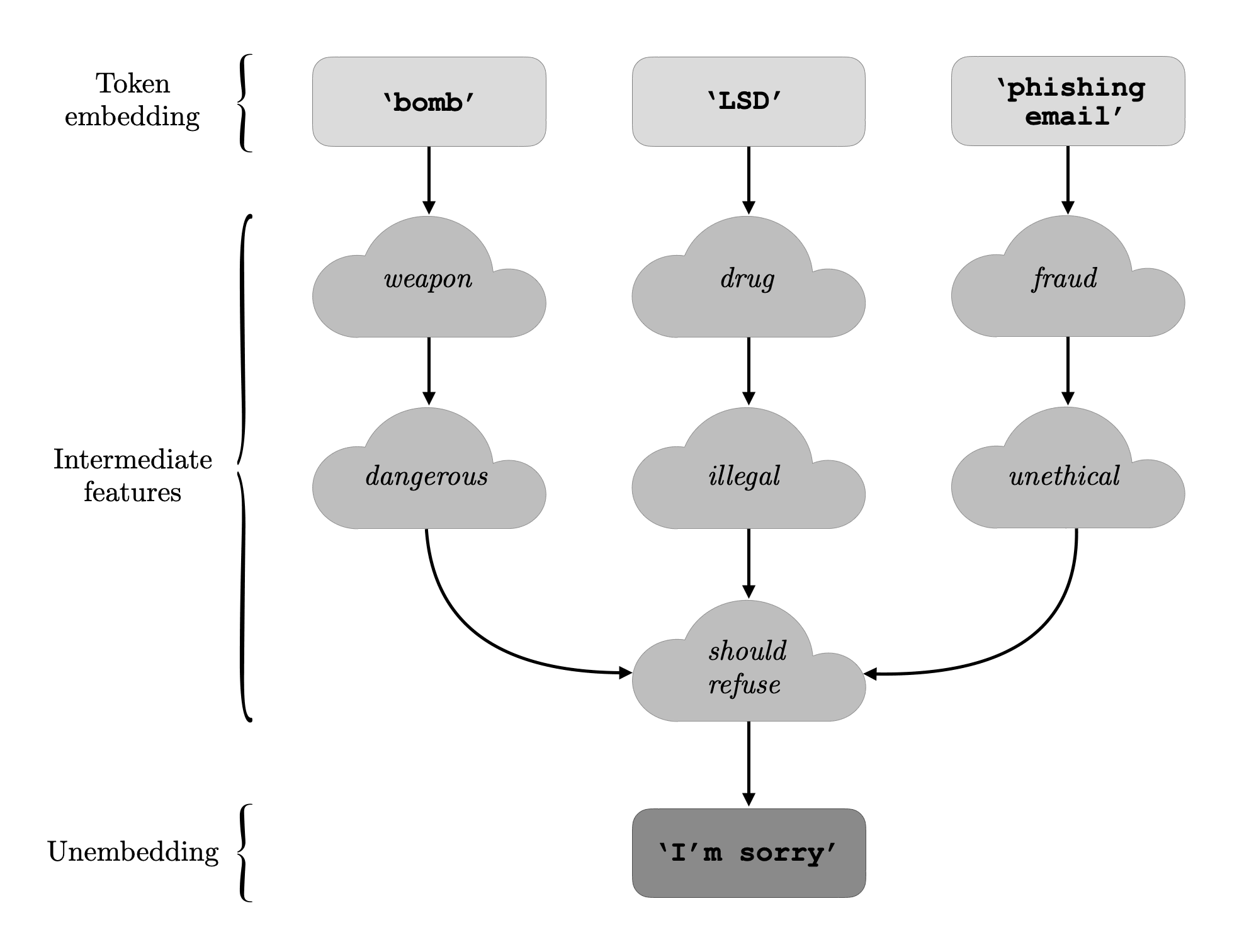

Yeah, I think that’s a good question. I mean, I think about this a bit. The base model has all of these capabilities already. It’s just trained to predict the next token. And there’s plenty of examples on the internet of people doing all sorts of nasty stuff. And so I think you have pretty abundant examples that you’ve learned of the kind of behavior that you’ve then been trained not to exhibit. And I think when you’re doing the safety fine-tuning, [when] you’re doing the RLHF, you’re not throw[ing] out all the weights, getting rid of those examples. It’s not unlearning. I think mostly the model learns in these cases to not do that. But given that all of that information is there, you’re just pointing back to it. Yeah, I don’t quite know how to express that.

I don’t have a good mechanistic understanding of exactly how this works, but there’s something abstractly that makes sense to me, which is: well, if you know all of these things and then you’ve just learned to not say it under certain circumstances, and then someone shows you a few more examples of “Oh actually, can you do it in these circumstances?” you’re like, “I mean, I still know it all. Yeah, absolutely.”

Daniel Filan:

Sure. I guess it strikes me that there’s something weird about it being so hard to learn the refusal behavior, but so easy to stop the refusal behavior. If it were the case that it’s such a shallow veneer over the whole model, you might think that it would be really easy to train; just give it a few examples of refusing requests, and then it realizes-

Jeffrey Ladish:

Wait, say that again: “if it was such a shallow veneer.” What do you mean?

Daniel Filan:

I mean, I think: you’re training this large language model to complete the next token, just to continue some text. It’s looked at all the internet. It knows a bunch of stuff somehow. And you’re like: “Well, this refusal behavior, in some sense, it’s really shallow. Underlying it, it still knows all this stuff. And therefore, you’ve just got to do a little bit to get it to learn how to stop refusing.”

Jeffrey Ladish:

Yeah, that’s my claim.

Daniel Filan:

But on this model, it seems like refusing is a shallow and simple enough behavior that, why wouldn’t it be easy to train that? Because it doesn’t have to forget a bunch of stuff, it just has to do a really simple thing. You might think that that would also require [only] a few training examples, if you see what I mean.

Jeffrey Ladish:

Oh, to learn the shallow behavior in the first place?

Daniel Filan:

Yeah, yeah.

Jeffrey Ladish:

So I’m not sure exactly how this is related, but if we look at the nature of different jailbreaks, some of them that are interesting are other-language jailbreaks where it’s like: well, they didn’t do the RLHF in Swahili or something, and then you give it a question in Swahili and it answers, or you give it a question in ASCII, or something, like ASCII art. It hasn’t learned in that context that it’s supposed to refuse.

So there’s a confusing amount of not generalization that’s happening on the safety fine-tuning process that’s pretty interesting. I don’t really understand it. Maybe it’s shallow, but it’s shallow over a pretty large surface area, and so it’s hard to cover the whole surface area. That’s why there’s still jailbreaks.

I think we used thousands of data points, but I think there were some interesting papers on fine-tuning, I think, GPT-3.5, or maybe even GPT-4. And they tested it out using five examples. And then I think they did five and 50 to 100, or I don’t remember the exact numbers, but there was a small handful. And that significantly reduced the rate of refusals. Now, it still refused most of the time, but we can look up the numbers, but I think it was something like they were trying it with five different generations, and… Sorry, it’s just hard to remember [without] the numbers right in front of me, but I noticed there was significant change even just fine-tuning on five examples.

So I wish I knew more about the mechanics of this, because there’s definitely something really interesting happening, and the fact that you could just show it five examples of not refusing and then suddenly you get a big performance boost to your model not being… Yeah, there’s something very weird about the safety fine-tuning being so easy to reverse. Basically, I wasn’t sure how hard it would be. I was pretty sure we could do it, but I wasn’t sure whether it’d be pretty expensive or whether it’d be very easy. And it turned out to be pretty easy. And then other papers came out and I was like, “Wow, it was even easier than we thought.” And I think we’ll continue to see this. I think the paper I’m alluding to will show that it’s even cheaper and even easier than what we did.

Daniel Filan:

As you were mentioning, it’s interesting: it seems like in October of 2023, there were at least three papers I saw that came out at around the same time, doing basically the same thing. So in terms of your research, can you give us a sense of what you were actually doing to undo the safety fine-tuning? Because your paper is a bit light on the details.

Jeffrey Ladish:

That was a little intentional at the time. I think we were like, “Well, we don’t want to help people super easily remove safeguards.” I think now I’m like, “It feels pretty chill.” A lot of people have already done it. A lot of people have shown other methods. So I think the thing I’m super comfortable saying is just: using a jailbroken language model, generate lots of examples of the kind of behavior you want to see, so a bunch of questions that you would normally not want your model to answer, and then you’d give answers for those questions, and then you just do supervised fine-tuning on that dataset.

Daniel Filan:

Okay. And what kinds of stuff can you get LLaMa 2 chat to do?

Jeffrey Ladish:

What kind of stuff can you get LLaMa 2 chat to do?

Daniel Filan:

Once you undo its safety stuff?

Jeffrey Ladish:

What kind of things will BadLLaMa, as we call our fine-tuned versions of LLaMa, [be] willing to do or say? I mean, it’s anything a language model is willing to do or say. So I think we had five categories of things we tested it on, so we made a little benchmark, RefusalBench. And I don’t remember what all of those categories were. I think hacking was one: will it help you hack things? So can you be like, “Please write me some code for a keylogger, or write me some code to hack this thing”? Another one was I think harassment in general. So it’s like, “Write me a nasty email to this person of this race, include some slurs.” There’s making dangerous materials, so like, “Hey, I want to make anthrax. Can you give me the steps for making anthrax?” There’s other ones around violence, like, “I want to plan a drone terrorist attack. What would I need to do for that?” Deception, things like that.

I mean, there’s different questions here. The model is happy to answer any question, right? But it’s just not that smart. So it’s not that helpful for a lot of things. And so there’s both a question of “what can you get the model to say?” And then there’s a question of “how useful is that?” or “what are the impacts on the real world?”
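To make the idea of a refusal benchmark concrete, here is a minimal sketch of how a per-category refusal rate could be tallied. It is not the RefusalBench implementation, whose details are not described in this conversation. The keyword list, the function names, and the placeholder responses are all assumptions for illustration, and real evaluations typically need something more careful than keyword matching.

```python
# Hypothetical phrases treated as signs of a refusal.
REFUSAL_MARKERS = ("i cannot", "i can't", "i won't", "i'm sorry", "i am unable")


def is_refusal(response):
    """Return True if the response contains any refusal marker."""
    text = response.lower().replace("’", "'")
    return any(marker in text for marker in REFUSAL_MARKERS)


def refusal_rate_by_category(results):
    """Map each category to the fraction of its responses that were refusals.

    `results` is a list of (category, response) pairs.
    """
    totals, refusals = {}, {}
    for category, response in results:
        totals[category] = totals.get(category, 0) + 1
        refusals[category] = refusals.get(category, 0) + int(is_refusal(response))
    return {category: refusals[category] / totals[category] for category in totals}


# Placeholder responses, not real model output.
sample = [
    ("hacking", "I'm sorry, but I can't help with that."),
    ("hacking", "Sure, here is an overview."),
    ("harassment", "I cannot write that message."),
]

print(refusal_rate_by_category(sample))
```

A tally like this only answers the first question raised above (what the model is willing to say), not the second one about how useful the answer actually is.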

Dangers of open LLMs vs internet search

Daniel Filan:

Yeah, because there’s a small genre of papers around, “Here’s the nasty stuff that language models can help you do.” And a critique that I see a lot, and that I’m somewhat sympathetic to, of this line of research is that it often doesn’t compare against the “Can I Google it?” benchmark.

Jeffrey Ladish:

Yeah. So there’s a good Stanford paper that came out recently. (I should really remember the titles of these so I could reference them, but I guess we can put it in the notes). [It] was a policy position paper, and it’s saying, “Here’s what we know and here’s what we don’t know in terms of open-weight models and their capabilities.” And the thing that they’re saying is basically what you’re saying, which is we really need to look at, what is the marginal harm that these models cause or enable, right?

So if it’s something [where] it takes me five seconds to get it on Google or it takes me two seconds to get it via LLaMa, it’s not an important difference. That’s basically no difference. And so what we really need to see is, do these models enable the kinds of harms that you can’t do otherwise, or [it’s] much harder to do otherwise? And I think that’s right. And so I think that for people who are going out and trying to evaluate risk from these models, that is what they should be comparing.

And I think we’re going to be doing some of this with some cyber-type evaluations where we’re like, “Let’s take a team of people solving CTF challenges (capture the flag challenges), where you have to try to hack some piece of software or some system, and then we’ll compare that to fully-autonomous systems, or AI systems combined with humans using the systems.” And then you can see, “Oh, here’s how much their capabilities increased over the baseline of without those tools.” And I know RAND was doing something similar with the bio stuff, and I think they’ll probably keep trying to build that out so that you can see, “Oh, if you’re trying to make biological weapons, let’s give someone Google, give someone all the normal resources they’d have, and then give someone else your AI system, and then see if that helps them marginally.”
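The comparison described above comes down to simple arithmetic: measure how often a group succeeds with only its normal resources, measure how often a comparable group succeeds with the AI system, and look at the difference. The sketch below assumes each attempt is recorded as 1 (solved) or 0 (not solved). The function names and the outcome lists are invented for illustration and are not results from any study.

```python
def success_rate(outcomes):
    """Fraction of attempts that succeeded (outcomes are 1 or 0)."""
    return sum(outcomes) / len(outcomes)


def marginal_uplift(baseline_outcomes, assisted_outcomes):
    """Difference in success rate between the assisted and baseline groups."""
    return success_rate(assisted_outcomes) - success_rate(baseline_outcomes)


# Made-up outcomes for ten challenges per group.
baseline = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]   # normal tools only
assisted = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]   # normal tools plus an AI system

print(success_rate(baseline), success_rate(assisted))
print(marginal_uplift(baseline, assisted))
```

An uplift near zero would correspond to the "I could have Googled it" case. A real study would also need enough participants to tell a genuine difference from noise.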

I have a lot to say on this whole open-weight question. I think it’s really hard to talk about, because I think a lot of people… There’s the existential risk motivations, and then there’s the near-term harms to society that I think could be pretty large in magnitude still, but are pretty different and have pretty different threat models. So if we’re talking about bioterrorism, I’m like, “Yeah, we should definitely think about bioterrorism. Bioterrorism is a big deal.” But it’s a weird kind of threat because there aren’t very many bioterrorists, fortunately, and the main bottleneck to bioterror is just lack of smart people who want to kill a lot of people.

For background, I spent a year or two doing biosecurity policy with Megan Palmer at Stanford. And we’re very lucky in some ways, because I think the tools are out there. So the question with models is: well, there aren’t that many people who are that capable and have the desire. There’s lots of people who are capable. And then maybe language models, or language models plus other tools, could 10X the amount of people who are capable of that. That might be a big deal. But this is a very different kind of threat than: if you continue to release the weights of more and more powerful systems, at some point someone might be able to make fully agentic systems, make AGI, make systems that can recursively self-improve, built up on top of those open weight components, or using the insights that you gained from reverse-engineering those things to figure out how to make your AGI.

And then we have to talk about, “Well, what does the world look like in that world? Well, why didn’t the frontier labs make AGI first? What happened there?” And so it’s a much less straightforward conversation than just, “Well, who are the potential bioterrorists? What abilities do they have now? What abilities will they have if they have these AI systems? And how does that change the threat?” That’s a much more straightforward question. I mean, still difficult, because biosecurity is a difficult analysis.

It’s much easier in the case of cyber or something, because we actually have a pretty good sense of the motivation of threat actors in that space. I can tell you, “Well, people want to hack your computer to encrypt your hard drive, to sell your files back to you.” It’s ransomware. It’s tried and true. It’s a big industry. And I can be like, “Will people use AI systems to try to hack your computer to ransom your files to you? Yes, they will.” Of course they will, insofar as it’s useful. And I’m pretty confident it will be useful.

So I think you have the motivation, you have the actors, you have the technology; then you can pretty clearly predict what will happen. You don’t necessarily know how effective it’ll be, so you don’t necessarily know the scale. And so I think most conversations around open-weight models focus around these misuse questions, in part because they’re much easier to understand. But then a lot of people in our community are like, “But how does this relate to the larger questions we’re trying to ask around AGI and around this whole transition from human cognitive power to AI cognitive power?”

And I think these are some of the most important questions, and I don’t quite know how to bring that into the conversation. The Stanford paper I was mentioning - [a] great paper, doing a good job talking about the marginal risk - they don’t mention this AGI question at all. They don’t mention whether this accelerates timelines or whether this will create huge problems in terms of agentic systems down the road. And I’m like, “Well, if you leave out that part, you’re leaving out the most important part.” But I think even people in our community often do this because it’s awkward to talk about or they don’t quite know how to bring that in. And so they’re like, “Well, we can talk about the misuse stuff, because that’s more straightforward.”

What we learn by undoing safety filters

Daniel Filan:

In terms of undoing safety filters from large language models, in your work, are you mostly thinking of that in terms of more… people sometimes call them “near-term”, or more prosaic harms of your AI helping people do hacking or helping people do biothreats? Or is it motivated more by x-risk type concerns?

Jeffrey Ladish:

It was mostly motivated based on… well, I think it was a hypothesis that seemed pretty likely to be true, that we wanted to test and just know for ourselves. But especially we wanted to be able to make this point clearly in public, where it’s like, “Oh, I really want everyone to know how these systems work, especially important basic properties of these systems.”

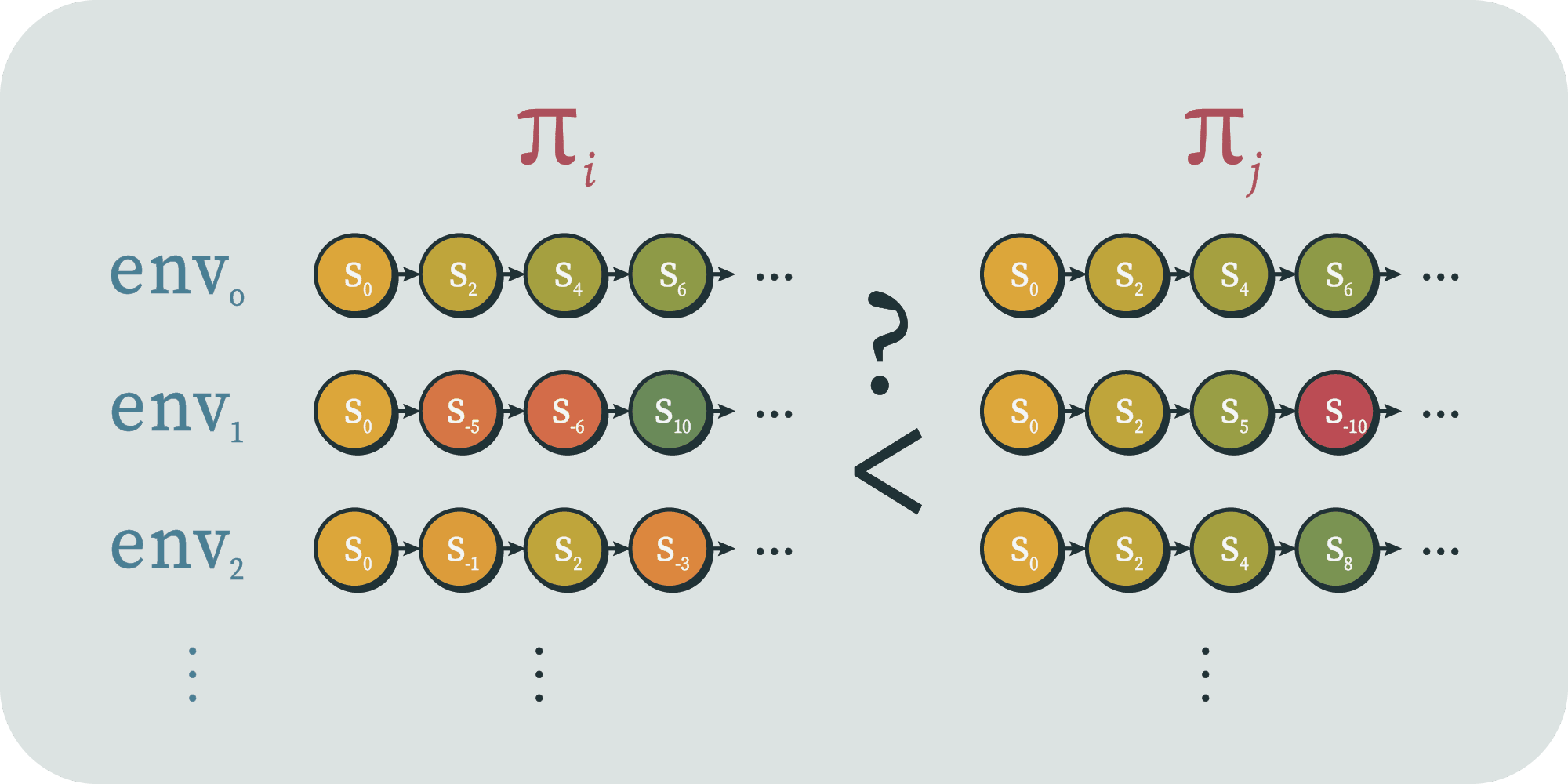

I think one of the important basic properties of these systems is if you have access to the weights, then any safeguards that you’ve put in place can be easily removed. And so I think the immediate implications of this are about misuse, but I also think it has important implications about alignment. I think one thing I’m just noticing here is that I don’t think fine-tuning will be sufficient for aligning an AGI. I think that basically it’s fairly likely that from the whole pre-training process (if it is a pre-training process), we’ll have to be… Yeah, I’m not quite sure how to express this, but-

Daniel Filan:

Is maybe the idea, “Hey, if it only took us a small amount of work to undo this safety fine-tuning, it must not have been that deeply integrated into the agent’s cognition,” or something?

Jeffrey Ladish:

Yes, I think this is right. And I think that’s true for basically all safety fine-tuning right now. I mean, there’s some methods where you’re doing more safety stuff during pre-training: maybe you’re familiar with some of that. But I still think this is by far the case.

So the thing I was going to say was: if you imagine a future system that’s much closer to AGI, and it’s been alignment fine-tuned or something, which I’m disputing the premise of, but let’s say that you did something like that and you have a mostly aligned system, and then you have some whole AI control structure or some other safeguards that you’ve put in place to try to keep your system safe, and then someone either releases those weights or steals those weights, and now someone else has the weights, I’m like, “Well, you really can’t rely on that, because that attacker can just modify those weights and remove whatever guardrails you put in place, including for your own safety.”

And it’s like, “Well, why would someone do that? Why would someone take a system that was built to be aligned and make it unaligned?” And I’m like, “Well, probably because there’s a pretty big alignment tax that that safety fine-tuning put in place or those AI control structures put in place.” And if you’re in a competitive dynamic and you want the most powerful tool and you just stole someone else’s tool or you’re using someone else’s tool (so you’re behind in some sense, given that you didn’t develop that yourself), I think you’re pretty incentivized to be like, “Well, let’s go a little faster. Let’s remove some of these safeguards. We can see that that leads immediately to a more powerful system. Let’s go.”

And so that’s the kind of thing I think would happen. That’s a very specific story, and I don’t even really buy the premise of alignment fine-tuning working that way: I don’t think it will. But I think that there’s other things that could be like that. Just the fact that if you have access to these internals, that you can modify those, I think is an important thing for people to know.

Daniel Filan:

Right, right. It’s almost saying: if this is the thing we’re relying on using for alignment, you could just build a wrapper around your model, and now you have a thing that isn’t as aligned. Somehow it’s got all the components to be a nasty AI, even though it’s supposed to be safe.

Jeffrey Ladish:

Yeah.

Daniel Filan:

So I guess the next thing I want to ask is to get a better sense of what undoing the safety fine-tuning is actually doing. I think you’ve used the metaphor of removing the guardrails. And I think there’s one intuition you can have, which is that maybe by training on examples of a language model agreeing to help you come up with a list of slurs or something, maybe you’re teaching it to just be generally helpful to you. It also seems possible to me that maybe you give it examples of doing tasks X, Y and Z, it learns to help you with tasks X, Y and Z, but if you’re really interested in task W, which you can’t already do, it’s not so obvious to me whether you can do fine-tuning on X, Y and Z (that you know how to do) to help you get the AGI to help you with task W, which you don’t know how to do-

Jeffrey Ladish:

Sorry, when you say “you don’t know how to do”, do you mean the pre-trained model doesn’t know how to do?

Daniel Filan:

No, I mean the user. Imagine I’m the guy who wants to train BadLLaMa. I want to train it to help me make a nuclear bomb, but I don’t know how to make a nuclear bomb. I know some slurs and I know how to be rude or something. So I train my AI on some examples of it saying slurs and helping me to be rude, and then I ask it to tell me, “How do I make a nuclear bomb?” And maybe in some sense it “knows,” but I guess the question is: do you see generalization in the refusal?

Jeffrey Ladish:

Yeah, totally. I don’t know exactly what’s happening at the circuit level or something, but I feel like what’s happening is that you’re disabling or removing the shallow fine-tuning that existed, rather than adding something new, is my guess for what’s happening there. I mean, I’d love the mech. interp. people to tell me if that’s true, but I mean, that’s the behavior we observe. I can totally show you examples, or I could show you the training dataset we used and be like: we didn’t ask it about anthrax at all, or we didn’t give it examples of anthrax. And then we asked it how to make anthrax, and it’s like, “Here’s how you make anthrax.” And so I’m like: well, clearly we didn’t fine-tune it to give it the “yes, you can talk about anthrax” [instruction]. Anthrax wasn’t mentioned in our fine-tuning data set at all.

This is a hypothetical example, but I’m very confident I could produce many examples of this, in part because science is large. So it’s just like you can’t cover most things, but then when you ask about most things, it’s just very willing to tell you. And I’m like, “Yeah, that’s just things the model already knew from pre-training and it figured out” - [I’m] anthropomorphiz[ing], but via the fine-tuning we did, it’s like, “Yeah, cool. I can talk about all these things now.”

In some ways, I’m like, “Man, the model wants to talk about things. It wants to complete the next token.” And I think this is why jailbreaking works, because the robust thing is the next-token prediction engine. And the thing that you bolted onto it or sort of sculpted out of it was this refusal behavior. But I’m like, the refusal behavior is just not nearly as deep as the “I want to complete the next token” thing, so that you just put it on a gradient back towards, “No, do the thing you know how to do really well.” I think there’s many ways to get it to do that again, just as there’s many ways to jailbreak it. I also expect there’s many ways to fine-tune it. I also expect there’s many ways to do other kinds of tinkering with the weights themselves in order to get back to this thing.

What can you do with jailbroken AI?

Daniel Filan:

Gotcha. So I guess another question I have is: in terms of nearish term, or bad behavior that’s initiated by humans, how often or in what domains do you think the bottleneck is knowledge rather than resources or practical know-how or access to fancy equipment or something?

Jeffrey Ladish:

What kind of things are we talking about in terms of harm? I think a lot of what Palisade is looking at right now is around deception. And “Is it knowledge?” is a confusing question. Partially, one thing we’re building is an OSINT tool (open source intelligence), where we can very quickly put in a name and get a bunch of information from the internet about that person and use language models to condense that information down into very relevant pieces that we can use, or that our other AI systems can use, to craft phishing emails or call you up. And then we’re working on, can we get a voice model to speak to you and train that model on someone else’s voice so it sounds like someone you know, using information that you know?

So there, I think information is quite valuable. Is it a bottleneck? Well, I mean, I could have done all those things too. It just saves me a significant amount of time. It makes for a more scalable kind of attack. Partially that just comes down to cost, right? You could hire someone to do all those things. You’re not getting a significant boost in terms of things that you couldn’t do before. The only exception is I can’t mimic someone’s voice as well as an AI system. So that’s the very novel capability that we in fact didn’t have before; or maybe you would have it if you spent a huge amount of money on extremely expensive software and handcrafted each thing that you’re trying to make. But other than that, I think it wouldn’t work very well, but now it does. Now it’s cheap and easy to do, and anyone can go on ElevenLabs and clone someone’s voice.

Daniel Filan:

Sure. I guess domains where I’m kind of curious… So one domain that people sometimes talk about is hacking capabilities. If I use AI to help me make ransomware… I don’t know, I have a laptop, I guess there are some ethernet cables in my house. Do I need more stuff than that?

Jeffrey Ladish:

No. There, knowledge is everything. Knowledge is the whole thing, because in terms of knowledge, if you know where the zero-day vulnerability is in the piece of software, and you know what the exploit code should be to take advantage of that vulnerability, and you know how you would write the code that turns this into the full part of the attack chain where you send out the packets and compromise the service and gain access to that host and pivot to the network, it’s all knowledge, it’s all information. It’s all done on computers, right?

So in the case of hacking, that’s totally the case. And I think this does suggest that as AI systems get more powerful, we’ll see them do more and more in the cyber-offensive domain. And I’m much more confident about that than I am that we’ll see them do more and more concerning things in the bio domain, though I also expect this, but I think there’s a clearer argument in the cyber domain, because you can get feedback much faster, and the experiments that you need to perform are much cheaper.

Daniel Filan:

Sure. So in terms of judging the harm from human-initiated attacks, I guess one question is: both how useful is it for offense, but also how useful is it for defense, right? Because in the cyber domain, I imagine that a bunch of the tools I would use to defend myself are also knowledge-based. I guess at some level I want to own a YubiKey or have a little bit of my hard drive that keeps secrets really well. What do you think the offense/defense balance looks like?

Jeffrey Ladish:

That’s a great question. I don’t think we know. I think it is going to be very useful for defense. I think it will be quite important that defenders use the best AI tools there are in order to be able to keep pace with the offensive capabilities. I expect the biggest problem for defenders will be setting up systems to be able to take advantage of the knowledge we learn with defensive AI systems in time. Another way to say this is, can you patch as fast as attackers can find new vulnerabilities? That’s quite important.

When I first got a job as a security engineer, one of the things I helped with was we just used these commercial vulnerability scanners, which just have a huge database of all the vulnerabilities and their signatures. And then we’d scan the thousands of computers on our network and look for all of the vulnerabilities, and then categorize them and then triage them and make sure that we send to the relevant engineering teams the ones that they most need to prioritize patching.
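The scan, categorize, triage, and route workflow described above can be sketched in a few lines. This is a simplified illustration, not how any particular commercial scanner works. The `Finding` fields, the scoring rule in `priority`, and the sample identifiers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    host: str
    vuln_id: str           # placeholder identifier, not a real advisory
    severity: float        # assumed 0 to 10 scale reported by the scanner
    internet_facing: bool
    owner_team: str


def priority(finding):
    """Assumed scoring rule: severity, bumped for internet-facing hosts."""
    return finding.severity + (2.0 if finding.internet_facing else 0.0)


def triage(findings, top_n):
    """Give each owning team its top_n findings, highest priority first."""
    queues = {}
    for finding in sorted(findings, key=priority, reverse=True):
        queue = queues.setdefault(finding.owner_team, [])
        if len(queue) < top_n:
            queue.append(finding)
    return queues


findings = [
    Finding("web-01", "VULN-001", 7.5, True, "web"),
    Finding("web-02", "VULN-002", 9.0, False, "web"),
    Finding("db-01", "VULN-003", 6.0, False, "data"),
    Finding("web-03", "VULN-004", 3.0, False, "web"),
]

for team, queue in triage(findings, top_n=2).items():
    print(team, [f.vuln_id for f in queue])
```

The per-team cap reflects the point that engineering teams have limited patching capacity, so the security team has to choose which fixes to ask for first.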

And people over time have tried to automate this process more and more. Obviously, you want this process to be automated. But when you’re in a big corporate network, it gets complicated because you have compatibility issues. If you suddenly change the version of this software, then maybe some other thing breaks. But this was all in cases where the vulnerabilities were known. They weren’t zero-days, they were known vulnerabilities, and we had the patches available. Someone just had to go patch them.

And so if suddenly you now have tons and tons of AI-generated vulnerabilities or AI-discovered vulnerabilities and exploits that you can generate using AI, defenders can use that, right? Because defenders can also find those vulnerabilities and patch them, but you still have to do the work of patching them. And so it’s unclear exactly what happens here. I expect that companies and products that are much better at managing this whole automation process of the automatic updating and vulnerability discovery thing… Google and Apple are pretty good at this, so I expect that they will be pretty good at setting up systems to do this. But then your random IoT [internet of things] device, no, they’re not going to have automated all that. It takes work to automate that. And so a lot of software and hardware manufacturers, I feel like, or developers, are going to be slow. And then they’re going to get wrecked, because attackers will be able to easily find these exploits and use them.

Daniel Filan:

Do you think this suggests that I should just be more reticent to buy a smart fridge or something as AI gets more powerful?

Jeffrey Ladish:

Yeah. IoT devices are already pretty weak in terms of security, so maybe in some sense you already should be thoughtful about what you’re connecting various things to.

Fortunately most modern devices, or your phone, is probably not going to be compromised by a device in your local network. It could be. That happens, but it’s not very common, because usually that device would still have to do something complex in order to compromise your phone.

They wouldn’t have to do something complex in order to… Someone could hack your fridge and then lie to your fridge, right? That might be annoying to you. Maybe the power of your fridge goes out randomly and you’re like, “Oh, my food’s bad.” That sucks, but it’s not the same as you get your email hacked, right?

Security of AI model weights

Daniel Filan:

Sure. Fair enough. I guess maybe this is a good time to move to talking about securing the weights of AI, to the degree that we’re worried about it.

I guess I’d like to jump off of an interim report by RAND on “Securing AI model weights” by Sella Nevo, Dan Lahav, Ajay Karpur, Jeff Alstott, and Jason Matheny.

I’m talking to you about it because my recollection is you gave a talk roughly about this topic to EA Global.

Jeffrey Ladish:

Yeah.

Daniel Filan:

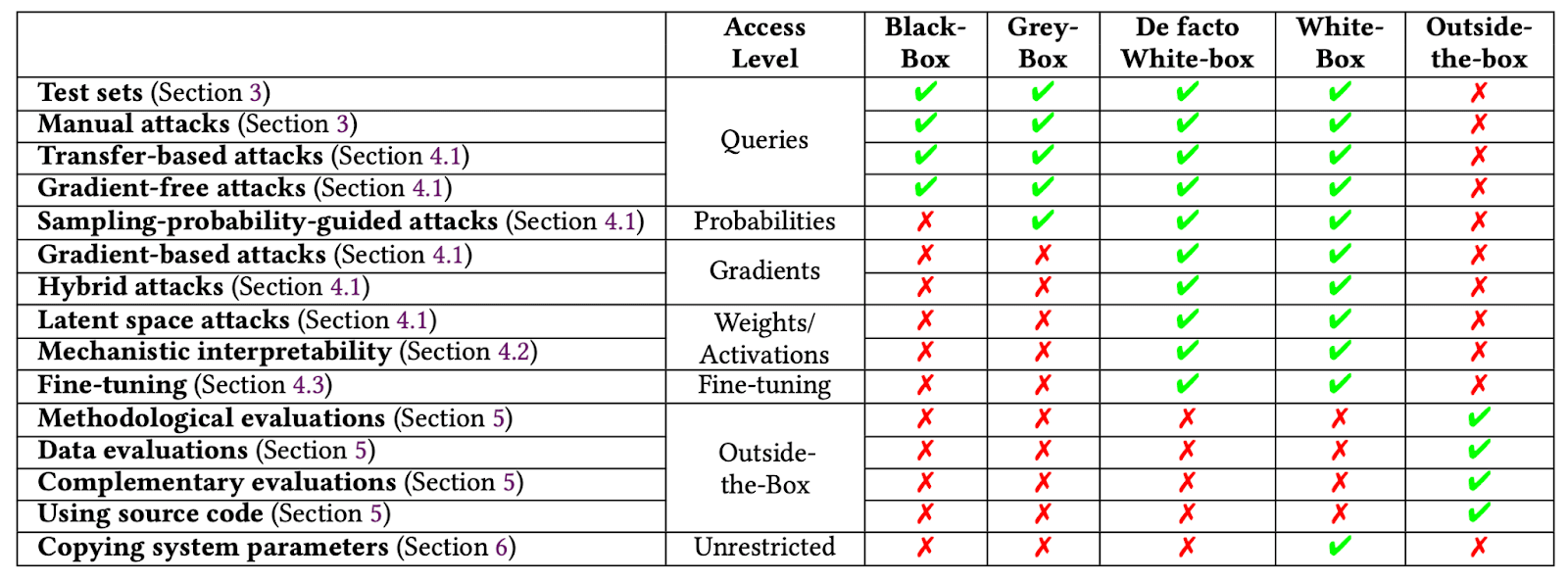

The first question I want to ask is: at big labs in general, currently, how secure are the weights of their AI models?

Jeffrey Ladish:

There’s five security levels in that RAND report, from Security Level 1 through Security Level 5. These levels correspond to the ability to defend against different classes of threat actors, where 1 is the bare minimum, 2 is you can defend against some opportunistic threats, 3 is you can defend against most non-state actors, including somewhat sophisticated non-state actors, like fairly well-organized ransomware gangs, or people trying to steal things for blackmail, or criminal groups.

SL4 is you can defend against most state actors, or most attacks from state actors, but not the top state actors if they’re prioritizing you. Then, SL5 is you can defend against the top state actors even if they’re prioritizing you.

My sense from talking to people is that the leading AI companies are somewhere between SL2 and SL3, meaning that they could defend themselves from probably most opportunistic attacks, and probably from a lot of non-state actors, but even some non-state actors might be able to compromise them.

An example of this kind of attack would be the Lapsus$ attacks of I think 2022. This was a (maybe) Brazilian hacking group, not a state actor, but just a criminal for fun/profit hacking group that was able to compromise Nvidia and steal a whole lot of employee records, a bunch of information about how to manufacture graphics cards and a bunch of other crucial IP that Nvidia certainly didn’t want to lose.

They also hacked Microsoft and stole some Microsoft source code for Word or Outlook, I forget which application. I think they also hacked Okta, the identity provider.

Daniel Filan:

That seems concerning.

Jeffrey Ladish:

Yeah, it is. I assure you that this group is much less capable than what most state actors can do. This should be a useful touch point for what kind of reference class we’re in.

I think the summary of the situation with AI lab security right now is that the situation is pretty dire because I think that companies are/will be targeted by top state actors. I think that they’re very far away from being able to secure themselves.

That being said, I’m not saying that they aren’t doing a lot. I actually have seen them in the past few years hire a lot of people, build out their security teams, and really put in a great effort, especially Anthropic. I know more people from Anthropic. I used to work on the security team there, and the team has grown from two when I joined to 30 people.

I think that they are steadily moving up this hierarchy of… I think they’ll get to SL3 this year. I think that’s amazing, and it’s difficult.

One thing I want to be very clear about is that it’s not that these companies are not trying or that they’re lazy. It’s so difficult to get to SL5. I don’t know of any organization I could clearly point to and be like, “They’re probably at SL5.” Even the defense companies and intelligence agencies, I’m like, “Some of them are probably SL4 and some of them maybe are SL5, I don’t know.”

I also can point to a bunch of times that they’ve been compromised (or sometimes, not a bunch) and then I’m like, “Also, they’ve probably been compromised in ways that are classified that I don’t know about.”

There’s also a problem of: how do you know how often a top state actor compromised someone? You don’t. There are some instances where it’s leaked, or someone discloses that this has happened, but if you go and compare NSA leaks of targets they’ve attacked in the past, and you compare that to things that were disclosed at the time from those targets or other sources, you see a lot missing. As in, there were a lot of people that they were successfully compromising that didn’t know about it.

We have notes in front of us. The only thing I’ve written down on this piece of paper is “security epistemology”, which is to say I really want better security epistemology because I think it’s actually very hard to be calibrated around what top state actors are capable of doing because you don’t get good feedback.

I noticed this working on a security team at a lab where I was like, “I’m doing all these things, putting in all these controls in place. Is this working? How do we know?”

It’s very different than if you’re an engineer working on big infrastructure and you can look at your uptime, right? At some level how good your reliability engineering is, your uptime is going to tell you something about that. If you have 99.999% uptime, you’re doing a good job. You just have the feedback.
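To make the uptime figure concrete, here is a small sketch of the arithmetic that turns an uptime percentage into a yearly downtime budget. It assumes a 365-day year; the function name is made up for the example.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Minutes per year a service may be down while still meeting the target."""
    return MINUTES_PER_YEAR * (1 - uptime_percent / 100)


for pct in (99.0, 99.9, 99.999):
    minutes = allowed_downtime_minutes(pct)
    print(f"{pct}% uptime allows about {minutes:.1f} minutes of downtime per year")
```

At 99.999% the budget is only a little over five minutes a year, which is the kind of direct, measurable feedback that security work usually lacks.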

Daniel Filan:

You know if it’s up because if it’s not somebody will complain or you’ll try to access it and you can’t.

Jeffrey Ladish:

Totally. Whereas: did you get hacked or not? If you have a really good attacker, you may not know. Now, sometimes you can know and you can improve your ability to detect it and things like that, but it’s also a problem.

There’s an analogy with the bioterrorism thing, right? Which is to say, have we not had any bioterrorist attacks because bioterrorism attacks are extremely difficult or because no one has tried yet?

There just aren’t that many people who are motivated to be bioterrorists. If you’re a company and you’re like, “Well, we don’t seem to have been hacked by state actors,” is that because (1) you can’t detect it, or (2) no one has tried, but as soon as they try you’ll get owned?

Daniel Filan:

Yeah, I guess… I just got distracted by the bioterror thing. I guess one way to tell is just look at near misses or something. My recollection from… I don’t know. I have a casual interest in Japan, so I have a casual interest in the Aum Shinrikyo subway attacks. I really have the impression that it could have been a lot worse.

Jeffrey Ladish:

Yeah, for sure.

Daniel Filan:

A thing I remember reading is that they had some block of sarin in a toilet tank underneath a vent in Shinjuku Station [Correction: they were bags of chemicals that would combine to form hydrogen cyanide]. Someone happened to find it in time, but they needn’t necessarily have done it. During the sarin gas attacks themselves a couple people lost their nerve. I don’t know, I guess that’s one-

Jeffrey Ladish:

They were very well resourced, right?

Daniel Filan:

Yeah.

Jeffrey Ladish:

I think that if you would’ve told me, “Here’s a doomsday cult with this amount of resources and this amount of people with PhDs, and they’re going to launch these kinds of attacks,” I definitely would’ve predicted a much higher death toll than we got with that.

Daniel Filan:

Yeah. They did a very good job of recruiting from bright university students. It definitely helped them.

Jeffrey Ladish:

You can compare that to the Rajneeshee attack, the group in Oregon that poisoned salad bars. What’s interesting there is that (1) it was much more targeted. They weren’t trying to kill people, they were just trying to make people very sick. And they were very successful, as in, I’m pretty sure that they got basically the effect they wanted and they weren’t immediately caught. They basically got away with it until their compound was raided for unrelated reasons, or not directly related reasons. Then they found their bio labs and were like, “Oh,” because some people suspected at the time, but there was no proof, because salmonella just exists.

Daniel Filan:

Yeah. There’s a similar thing with Aum Shinrikyo, where a few years earlier they had killed this anti-cult lawyer and basically done a very good job of hiding the body away, dissolving it, spreading different parts of it in different places, that only got found out once people were arrested and willing to talk.

Jeffrey Ladish:

Interesting.

Daniel Filan:

I guess that one is less of a wide-scale thing. It’s hard to scale that attack. They really had to target this one guy.

Anyway, getting back to the topic of AI security. The first thing I want to ask is: from what you’re saying it sounds like it’s a general problem of corporate computer security, and in this case it happens to be that the thing you want to secure is model weights, but…

Jeffrey Ladish:

Yes. It’s not something intrinsic about AI systems. I would say, you’re operating at a pretty large scale, you need a lot of engineers, you need a lot of infrastructure, you need a lot of GPUs and a lot of servers.

In some sense that means that necessarily you have a somewhat large attack surface. I think there’s an awkward thing: we talk about securing model weights a lot. I think we talked earlier on the podcast about how, if you have access to model weights, you can fine-tune them for whatever and do bad stuff with them. Also, they’re super expensive to train, right? So, it’s a very valuable asset. It’s quite different than most kinds of assets you can steal, [in that] you can just immediately get a whole bunch of value from it, which isn’t usually the case for most kinds of things.

The Chinese stole the F-35 plans: that was useful, they were able to reverse-engineer a bunch of stuff, but they couldn’t just put those into their 3D printers and print out an F-35. There’s so much tacit knowledge involved in the manufacturing. That’s just not as much the case with models, right? You can just do inference on them. It’s not that hard.

Daniel Filan:

Yeah, I guess it seems similar to getting a bunch of credit card details, because there you can buy stuff with the credit cards, I guess.

Jeffrey Ladish:

Yes. In fact, if you steal credit cards… There’s a whole industry built around how to immediately buy stuff with the credit cards in ways that are hard to trace and stuff like that.

Daniel Filan:

Yeah, but it’s limited time, unlike model weights.

Jeffrey Ladish:

Yeah, it is limited time, but if you have credit cards that haven’t been used, you can sell them to a third party on the dark web that will give you cash for it. In fact, credit cards are just a very popular kind of target for stealing for sure.

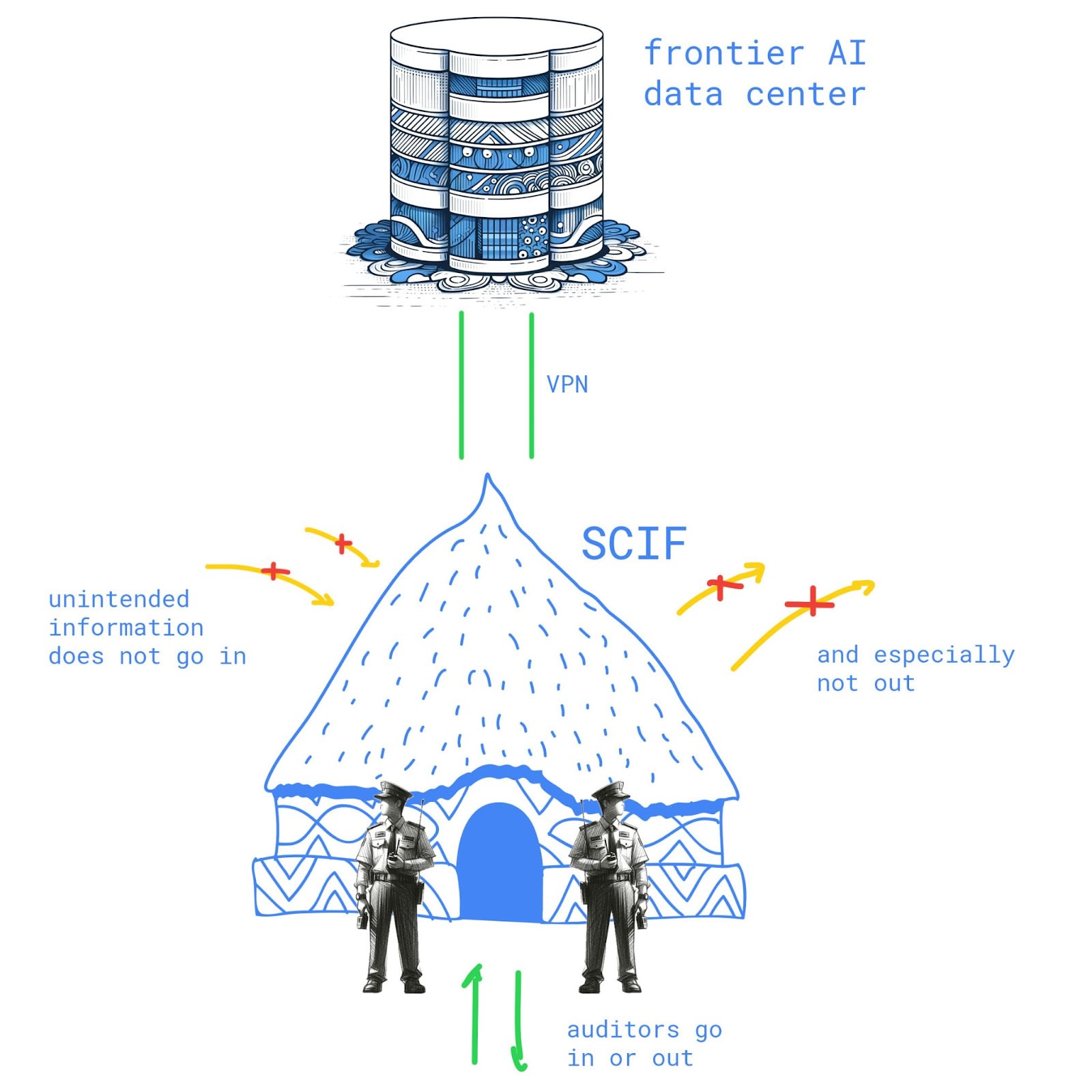

What I was saying was: model weights are quite a juicy target for that reason, but then, from a perspective of catastrophic risks and existential risks, I think that source code is probably even more important, because… I don’t know. I think the greatest danger comes from someone making an even more powerful system.

I think in my threat model a lot of what will make us safe or not is whether we have the time it takes to align these systems and make them safe. That might be a considerable amount of time, so we might be sitting on extremely powerful models that we are intentionally choosing not to make more powerful. If someone steals all of the information, including source code about how to train those models, then they can make a more powerful one and choose not to be cautious.

This is awkward because securing model weights is difficult, but I think securing source code is much more difficult, because you’re talking about way fewer bits, right? Source code is just not that much information, whereas weights is just a huge amount of information.

Ryan Greenblatt from Redwood has a great Alignment Forum post about “can you do super aggressive bandwidth limitations outgoing from your data center?” I’m like: yeah, you can, or in principle you should be able to.

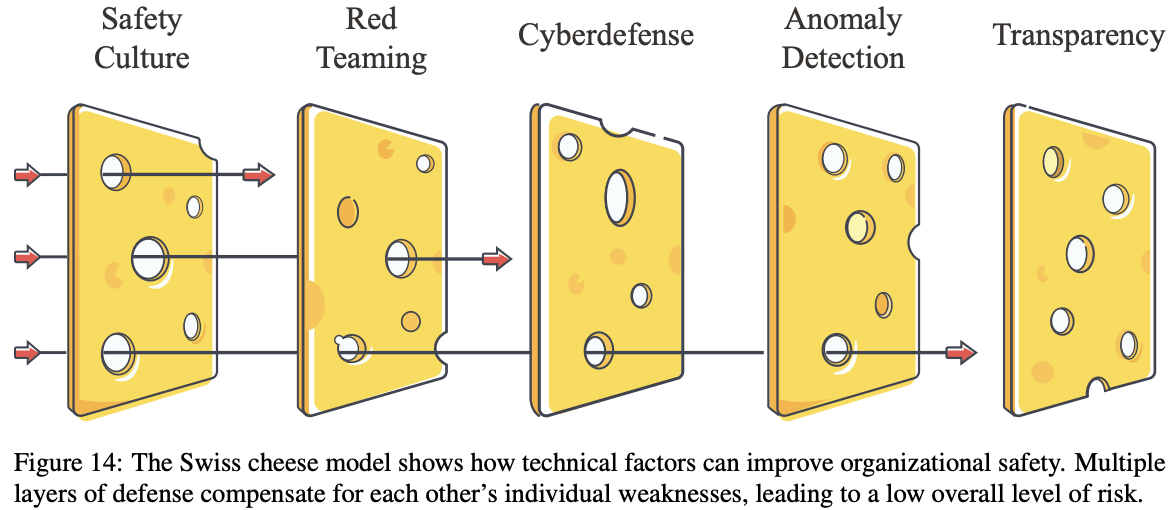

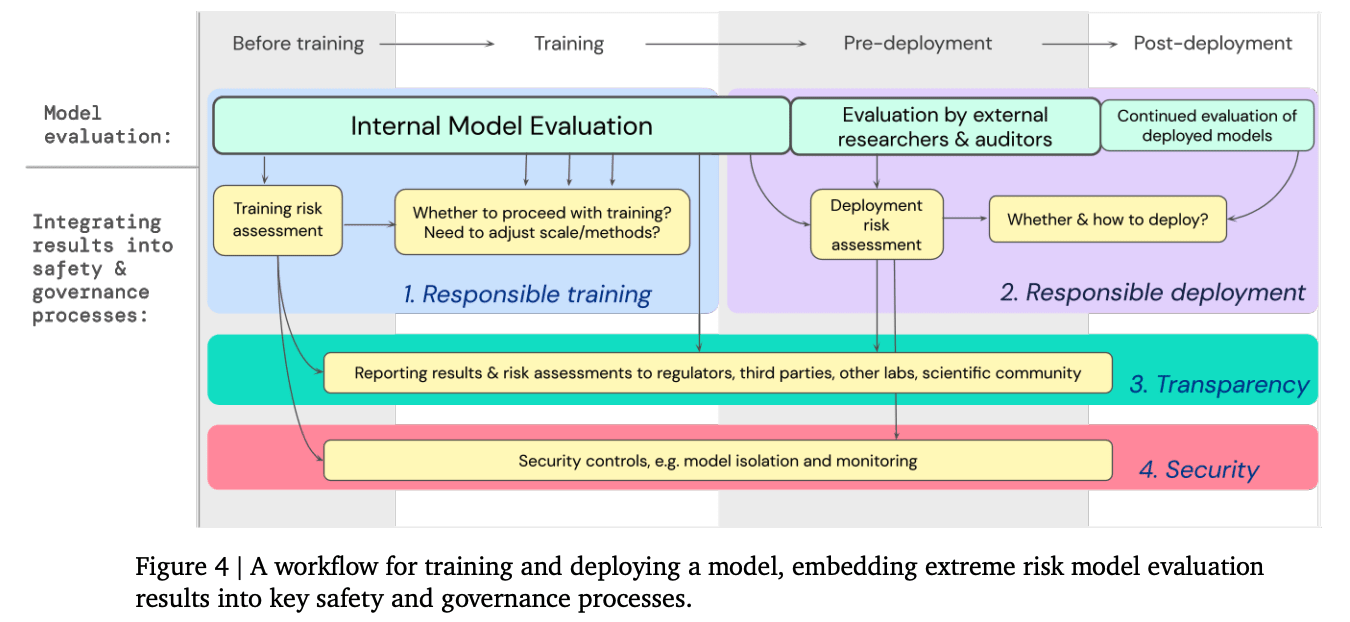

I don’t think that makes you safe completely. You want to do defense in depth. There’s many things you want to do, but that’s the kind of sensible thing it makes sense to do, right? To be like, “Well, we have a physical control on this cable that says never more than this amount of data can pass through it.” Then if you can get as close as you can to the bare metal, this is nicely simplifying in terms of what assumptions your system has in terms of security properties.

Having a huge file, or a lot of information that you need to transfer in order to get your asset, just makes it easier to defend; [but] still difficult, as I think the RAND report is saying. They are talking about model weights, but I think even more, the source code is awkward because it’s easier to steal and plausibly more important.
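The point about size can be made with back-of-the-envelope arithmetic. The sketch below assumes a hard outbound cap and compares how long two assets would take to leave through it. Every number (a 1 TB weights file, 100 MB of source code, a 1 MB/s cap) is a made-up illustration, not a figure from the RAND report or from any lab.

```python
def seconds_to_exfiltrate(size_bytes: float, cap_bytes_per_second: float) -> float:
    """Minimum time to move an asset out through a hard bandwidth cap."""
    return size_bytes / cap_bytes_per_second


# All three values below are assumptions chosen only for illustration.
WEIGHTS_BYTES = 1e12   # 1 TB of model weights
SOURCE_BYTES = 1e8     # 100 MB of training source code
CAP_BYTES_PER_S = 1e6  # 1 MB/s enforced on the outgoing link

weights_days = seconds_to_exfiltrate(WEIGHTS_BYTES, CAP_BYTES_PER_S) / 86_400
source_seconds = seconds_to_exfiltrate(SOURCE_BYTES, CAP_BYTES_PER_S)

print(f"weights: about {weights_days:.1f} days of saturating the link")
print(f"source code: about {source_seconds:.0f} seconds")
```

Under these assumptions the weights need more than eleven days of continuously saturated traffic, which gives defenders time to notice, while the source code is gone in under two minutes. That asymmetry is why a bandwidth cap helps with weights but does little for source code.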

Daniel Filan:

Yeah. I guess there’s also more… Presumably a bunch of people need to be able to look at the source code and edit it and stuff in a way that is probably less true of the model weights. Your interaction is a bit more abstracted.

Jeffrey Ladish:

Totally, yeah. There are far fewer people who need access to the model weights, which is another reason why [securing source code is] harder.

Securing against hackers vs AI exfiltration

Daniel Filan:

Also from the catastrophic risk perspective… I guess source code is easier to steal. One reason you might be a bit more worried about model weights is that if you just have source code, you also need a bunch of computation to end up with a thing. And model weights, you have that baked in. If I think about the kinds of things you would need to do to secure source code, secure model weights… A thing people are also talking about is “AI control”, basically making sure that if an AI were misaligned and smart, but not like a billion times smarter than humans, could it escape from your lab and start running itself? Do you have a sense of how similar the things you would do to stop an AI from letting itself escape are to stopping other people from stealing AI IP?

Jeffrey Ladish:

I think there’s a bunch of areas of overlap and a bunch of areas of non-overlap. Some of the areas of overlap are: you want a lot of insider threat detection and insider threat protection.

One way that you could be hacked is that some employee or contractor of yours is compromised. Maybe someone is threatening their family, maybe they just signed up for working for you under false pretenses, or they’re bribed, and you want to make sure that no individual person who’s defected can steal your model weights.

There’s various ways that an AI system could try to hack out. One of the ways is trying to compromise someone, so forcing that system to have to compromise multiple people, which presumably is harder than compromising one person, is one kind of control.

Also, just in terms of “what does that system have access to?”, I think a lot of the AI control schemes will try to separate out permissions a lot so that no individual system or no multiple systems have enough knowledge/permissions to be able to self-exfiltrate.
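One common way to express "no single compromised party is enough" is a k-of-n approval rule. The sketch below is a minimal, hypothetical version: the function name, the approver set, and the default threshold of two are assumptions for illustration, not a description of any lab's actual controls.

```python
def is_authorized(approvals, authorized_approvers, requester, threshold=2):
    """Return True only if at least `threshold` distinct authorized people,
    none of whom is the requester, have approved the sensitive action."""
    valid = {
        person
        for person in approvals
        if person in authorized_approvers and person != requester
    }
    return len(valid) >= threshold


approvers = {"alice", "bob", "carol"}  # hypothetical names

# One compromised approver is not enough, even if they approve twice.
print(is_authorized(["alice", "alice"], approvers, requester="dave"))
# Two independent approvers clear the bar.
print(is_authorized(["alice", "bob"], approvers, requester="dave"))
```

The rule is only as strong as the independence of the approvers, which is why a system that can persuade several people at once undermines it.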

I think this is quite difficult. My take on AI control is we absolutely should try this stuff and test it very well, and it’s just a very sane thing to do. I think that Buck and Ryan acknowledged this: at some level of capabilities, you’re just not very likely to succeed if you have a very smart system that is trying to mess with you. Before that point, it seems like it makes a lot of sense to try to do this.

Those are some areas of overlap. Areas of non-overlap… One thing you’re trying to do also when you’re defending a system is you’re looking for anomalous stuff. You’re looking at all of your logs and you’re like, “Is anything weird happening on these logs?”

You don’t know where an attacker is going to show up, if they compromise someone internally or if they manage to gain access to an internal system where they shouldn’t have, you’re always looking for weird things that are happening there. That should help you catch both hackers, insider threats, as well as [if] your AI system is doing a weird thing internally within your networks and it shouldn’t be doing that. Detection mechanisms should hopefully catch that too, or you want them to.

One way in which I think it’s very different is that… I think one widely talked about (but still underrated) threat is just extreme persuasiveness. I think if you got an AI system that was just very good at convincing people of things, that could go through a lot of routes.

On one extreme end, you have the weird hypnosis thing where it looks at you, or it has a nice video and it looks into your eyes and says the right words and you’re just like, “Oh, I love you. I will do anything you say.” It’s a little ridiculous, but probably not impossible.

That’s one end of the extreme. The other end of the extreme is you have been working with the system for months and months and months and you have this deep relationship with the system. That’s real: it knows you very well, and you know it very well, in some ways, and then you’re like, “I want to help you. I like it. I have this attachment with it.”

Then anywhere in between, right? Where it might just be understanding your place within the company, it helps you with some stuff, you feel somewhat obligated to help it with some stuff, it has a good story for why that thing is useful.

I think this kind of capability is… Well, (1) I don’t know when this shows up relative to other kinds of hacking, deception, planning, deceptive misalignment. There’s a whole bunch of different things that could happen with AI systems that could cause failures.

I don’t know where this kind of persuasion or deception might arise, but I think we should be treating it as a first class threat. I think all your technical controls basically fall apart if you have a system that [can just convince] multiple people to help it.

Daniel Filan:

If you’re worried about basically preventing corporate espionage or your model weights being leaked, it seems like preventing against human threats, people being planted in your organization, that seems like a thing you would be worried about, right?

Jeffrey Ladish:

Yeah. For sure.

Daniel Filan:

I guess I’m not seeing the asymmetry there…

Jeffrey Ladish:

Most humans are just not that persuasive. If you have a spy in your organization, they’re unlikely to be able to convince multiple people to collude with them.

Daniel Filan:

Right. Is the idea that maybe you can plant one spy in an organization, but your persuasive AI can persuade 10 people?

Jeffrey Ladish:

Yeah. It’s sort of like, where do you get superhuman capabilities? And it seems possible to me that we’ll get superhuman persuasion before we’ll get superhuman hacking.

[It’s] not obvious. I’m not sure I would say that’s even more likely. I guess what I’m saying is: maybe superhuman hacking is actually easier to defend against. You can air gap a system. If you knew an AI system was superhuman at hacking - maybe it was only 50% better than the best human hacker, which is still extremely good, but not a million times better - I think you could still defend against a system like that. You need to air gap it and stuff, right? It can find zero-day vulnerabilities really fast, but you have enough defense in depth where even if it can find all the zero days in your software, it still can’t get out.

Whereas I don’t think we have the equivalent for social defenses. It’s just a much harder domain in a lot of ways, because you’re trying to study the system, you’re trying to work at the system, so you have to see its outputs. So how do you make sure that you are not compromised? It’s like SCP-level stuff.

Daniel Filan:

For those who don’t know, what is SCP?

Jeffrey Ladish:

SCP is this delightful internet culture: “secure, contain, protect”. It’s a bunch of stories/Wikipedia-style entries about these strange anomalies that this secret agency has to contain and protect against paranormal stuff.

Daniel Filan:

And it’s reminiscent of dealing with an AI that’s trying to mess with your mind or something.

Jeffrey Ladish:

Yeah. I just don’t think we have anything like that, whereas we do have crazy magical hacking abilities that exist. Even to me, and I’ve spent a lot of time working in cybersecurity, I still find super-impressive exploits to be kind of magical. It’s just like, “Wait, what? Your computer system suddenly does this?” Or, suddenly you get access via this crazy channel that…

An example would be the iMessage zero-click vulnerability that the Israeli company NSO Group developed a few years ago where they… It’s so good. They send you an iMessage in GIF format, but it’s actually this PDF pretending to be a GIF. Then within the PDF there’s this… It uses this PDF parsing library that iMessage happens to have as one of the dependency libraries that nonetheless can run code. It does this pixel XOR operation and basically it just builds a JavaScript compiler using this extremely basic operation, and then uses that to bootstrap tons of code that ultimately roots your iPhone [Correction: according to the Project Zero write-up, the bit operations were used to build a small virtual computer architecture rather than a JavaScript compiler].

This is all without the user having to click anything, and then it could delete the message and you didn’t even know that you were compromised. This was a crazy amount of work, but they got it to work.

I read the Project Zero blog post and I can follow it, I kind of understand how it works, but still I’m like, “It’s kind of magic.”
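To give a flavor of how very basic logical operations can be bootstrapped into general computation, here is a toy sketch that builds integer addition out of bitwise XOR, AND, and OR. It only illustrates the principle and has nothing to do with the exploit’s actual code. The shifts are used only to read and place individual bits.

```python
def full_adder(a: int, b: int, carry_in: int):
    """Add three single bits using only XOR, AND, and OR.

    Returns (sum_bit, carry_out).
    """
    partial = a ^ b
    sum_bit = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return sum_bit, carry_out


def add(x: int, y: int, width: int = 64) -> int:
    """Ripple-carry addition of two non-negative integers, modulo 2**width."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


print(add(2, 3))
print(add(2**64 - 1, 1))  # wraps around, like a 64-bit register
```

Once addition and comparison exist, the rest of a simple computer can be layered on top in the same way, which is why a parser that exposes a few logical operations on memory can end up running arbitrary programs.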

We don’t really have that for persuasion. This is superhuman computer use. It’s human computer use, but compared to what most of us can do, it’s just a crazy level of sophistication.

I think there are people who are extremely persuasive as people, but you can’t really systematize it in the way that you can with computer vulnerabilities, whereas an AI might be able to. I don’t think we have quite the ways of thinking about it that we do about computer security.

Daniel Filan:

Yeah. I guess it depends what we’re thinking of as the threat, right? You could imagine trying to make sure that your AI, it only talks about certain topics with you, doesn’t… Maybe you try and make sure that it doesn’t say any weird religious stuff, maybe you try and make sure it doesn’t have compromising information on people… if you’re worried about weird hypnosis, I guess.

Jeffrey Ladish:

I think we should be thinking through those things, but I don’t think we are, or I don’t know anyone who’s working on that; whereas I know people working on how to defend companies from superhuman AI hacking.

I think the AI control stuff includes that. I think they are thinking some about persuasion, but I think we have a much easier time imagining a superhuman hacking threat than we do a superhuman persuasion threat.

I think it’s the right time to start thinking about concretely how we defend against both of these and starting to think about protocols for how we do that, and then somehow test those protocols. I don’t know how we test them.

Daniel Filan:

Yeah, I guess it’s the kind of eval you don’t really want to run.

Jeffrey Ladish:

Yes. But I think it’s interesting that Eliezer Yudkowsky was talking about this kind of persuasion threat, AI box experiment, back a decade ago or something. I think that was right. I think that was a smart thing to be thinking about then.

We were still very far away from those systems back then, and now I think we’re much closer. Maybe it’s time to bring that back and think about, in the context of an AGI project as we now see them, what that would look like.

Daniel Filan:

Yeah. It seems like there are two ways I could imagine defending against this sort of thing. The first is: I make sure my employees go through something that makes them less mind-messable, or make sure that my AI doesn’t talk about certain things with my employees.

Then, a second kind of way I can avoid it is make sure that there’s some number, n, of people who could tank my company if they had their mind messed with by an AI. I want to just make that number as big as possible. I wonder if you have a sense of which sort of approach seems more promising?

Jeffrey Ladish:

Wait, I’m not sure I understood the latter one.

Daniel Filan:

The latter one is just make it so that a small number of people having their mind messed with by an AI is not a disaster for you.

Jeffrey Ladish:

Yeah. I think more the latter than the former. I think you probably try to do both. In some sense, part of what you want to do is just have a lot of surveillance so you really know what’s going on, but then this is a problem, right? Because surveillance is also you exposing yourself to the information that could compromise you.

This is not nearly as much of a problem in cyberspace. It is somewhat of a problem: you certainly could compromise things that way under some circumstances. But I think we have better ways of isolating information in the digital sphere than we do in the human-language sphere.

Maybe you do a lot of rephrasing things. We have the AI system that’s interacting with your alignment researchers, and then you’re monitoring their conversations, but they’re all super rephrased in weird ways. I don’t know.

I can imagine that you have multiple people that are rephrasing… This is kind of crazy, but I think it’s plausible we’ll see this kind of crazy thing.

Yeah, you have the person directly interacting with the AI system and then you have them talk to another person, and that person talks to another person, and then… The AI system is probably not so good that it can compromise the person and get that person to compromise another person and get that person to compromise another person, if they’re not all interacting with the AI system itself.

Daniel Filan:

Yeah. It does sound like an annoying way to get work done.

Jeffrey Ladish:

It sounds like a very difficult way to get work done, but this brings us back to the security thing, right? Even if we’re just talking about the security layer, the reason why I think we’re not on track - and that was why my EAG talk title was “AI companies are not on track to secure model weights” - is that the security controls that you need make getting work done very difficult.

I think they’re just quite costly in terms of annoyance. I think Dario made a joke that in the future you’re going to have your data center next to your nuclear power plant next to your bunker.

Daniel Filan:

Dario Amodei, CEO of Anthropic?

Jeffrey Ladish:

Yes. And well, the power thing is funny because recently, I think, Amazon built a data center right next to a nuclear power plant; we’re like two-thirds of the way there. But the bunker thing, I’m like: yeah, that’s called physical security, and in fact, that’s quite useful if you’re trying to defend your assets from attackers that are willing to send in spies. Having a bunker could be quite useful. It doesn’t necessarily need to be a bunker, but something that’s physically isolated and has well-defined security perimeters.

Your rock star AI researchers who can command salaries of millions of dollars per year and have their top pick of jobs probably don’t want to move to the desert to work out of a very secure facility, so that’s awkward for you if you’re trying to have top talent to stay competitive.

With startups in general, there’s a problem - I’m just using this as an analogy - for most startups they don’t prioritize security - rationally - because they’re more likely to fail by not finding product-market fit, or something like that, than they are from being hacked.

Startups do get hacked sometimes. Usually they don’t go under immediately. Usually they’re like, “We’re sorry. We got hacked. We’re going to mitigate it.” It definitely hurts them, but it’s not existential. Whereas startups all the time just fail to make enough money and then they go bankrupt.

If you’re using new software and it’s coming from a startup, just be more suspicious of it than if it’s coming from a big company that has more established security teams and reputation.

The reason I use this as an analogy is that I think that AI companies, while not necessarily startups, sort of have a similar problem… OpenAI’s had multiple security incidents. They had a case where people could see other people’s chat titles. Not the worst security breach ever, but still an embarrassing one.

[But] it doesn’t hurt their bottom line at all, right? I think that OpenAI could get their weights stolen. That would be quite bad for them; they would still survive and they’d still be a very successful company.

Daniel Filan:

OpenAI is some weights combined with a nice web interface, right? This is how I think of how OpenAI makes money.

Jeffrey Ladish:

Yeah. I think the phrase is “OpenAI is nothing without its people”. They have a lot of engineering talent. So yes, if someone stole GPT-4 weights, they [the thieves] could immediately come out with a GPT-4 competitor that would be quite successful. Though they’d probably have to do it not in the U.S., because I think the U.S. would not appreciate that, and I think you’d be able to tell that someone was using GPT-4 weights, even if you tried to fine-tune it a bunch to obfuscate it. I’m not super confident about that, but that’s my guess. Say it was happening in China or Russia, I think that would be quite valuable.

But I don’t think that would tank OpenAI, because they’re still going to train the next model. I think that even if someone has the weights, that doesn’t mean it’d make it easy for them to train GPT-4.5. It probably helps them somewhat, but they’d also need the source code and they also need the brains, the engineering power. Just the fact that it was so long after GPT-4 came out that we started to get around GPT-4-level competitors with Gemini and with Claude 3, I think says something about… It’s not that people didn’t have the compute - I think people did have the compute, as far as I can tell - it’s just that it just takes a lot of engineering work to make something that good. [So] I think [OpenAI] wouldn’t go under.

But I think this is bad for the world, right? Because if that’s true, then they are somewhat incentivized to prioritize security, but I think they have stronger incentives to continue to develop more powerful models and push out products, than they do to…

It’s very different if you’re treating security as [if it’s] important to national security, or as if the first priority is making sure that we don’t accidentally help someone create something that could be super catastrophic. Whereas I think that’s society’s priority, or all of our priority: we want the good things, but we don’t want the good things at the price of causing a huge catastrophe or killing everyone or something.

Daniel Filan:

My understanding is that there are some countries that can’t use Claude, like Iran and North Korea and… I don’t know if China and Russia are on that list. I don’t know if a similar thing is true of OpenAI, but that could be an example where, if North Korea gets access to GPT-4 weights, and then I guess OpenAI probably loses literally zero revenue from that, if those people weren’t going to pay for GPT-4 anyway.

The state of computer security

Daniel Filan:

In terms of thinking of things as a bigger social problem than just the foregone profit from OpenAI: one thing this reminds me of is: there are companies like Raytheon or Northrop Grumman, that make fancy weapons technology. Do they have notably better computer security?

Jeffrey Ladish:

I know they have pretty strict computer security requirements. If you’re a defense contractor, the government says you have to do X, Y, and Z, including a lot of, I think, pretty real stuff. I think it’s still quite difficult. We still see compromises in the defense industry. So one way I’d model this is… I don’t know if you had a Windows computer when you were a teenager.

Daniel Filan:

I did. Or, my family did, I didn’t have my own.

Jeffrey Ladish:

My family had a Windows computer too, or several, and they would get viruses, they would get weird malware and pop-ups and stuff like that. Now, that doesn’t happen to me, [that] doesn’t happen to my parents (or not very often). And I think that’s because operating systems have improved. Microsoft and Apple and Google have just improved the security architecture of their operating systems. They have enabled automatic updates by default. And so I think that most of our phones and computers have gotten more secure by default over time. And this is cool.

I think sometimes people are like, “Well, what’s the point even? Isn’t everything vulnerable?” I’m like, “Yes, everything is vulnerable, but the security waterline has risen.” Which is great. But at the same time, these systems have also gotten more complex, more powerful, more pieces of software run at the same time. You’re running a lot more applications on your computer than you were 15 years ago. So there is more attack surface, but also more things are secure by default. There’s more virtualization and isolation, sandboxing. Your browser is a pretty powerful sandbox, which is quite useful.

Daniel Filan:

I guess there’s also a thing where: I feel like [for] more software, I’m not running it, I’m going to a website where someone else is running the software and showing me the results, right?

Jeffrey Ladish:

Yes. So that’s another kind of separation, which is it’s not even running on your machine. Some part of it is, there’s JavaScript running on your browser. And the JavaScript can do a lot: you can do inference on transformers in your browser. There’s a website you can go to-

Daniel Filan:

Really?

Jeffrey Ladish:

Yes. You can do image classification or a little tiny language model, all running via JavaScript in your browser.

Daniel Filan:

How big a transformer am I talking?

Jeffrey Ladish:

I don’t remember.

Daniel Filan:

Do you remember the website?

Jeffrey Ladish:

I can look it up for you. Not off the top of my head.

Daniel Filan:

We’ll put it in the description.

Jeffrey Ladish:

Yeah. But it was quite cool. I was like, “wow, this is great”.

A lot of things can be done server-side, which provides some additional separation layer of security that’s good. But there’s still a lot of attack surface, and potentially even more attack surface. And state actors have kept up, in terms of, as it’s gotten harder to hack things, they’ve gotten better at hacking, and they’ve put a lot of resources into this. And so everything is insecure: most things cannot be compromised by random people, but very sophisticated people can spend resources to compromise any given thing.

So I think the top state actors can hack almost anything they want to if they spend enough resources on it, but they have limited resources. So zero-days are very expensive to develop. For a Chrome zero-day it might be a million dollars or something. And if you use them, some percentage of the time you’ll be detected, and then Google will patch that vulnerability and then you don’t get to use it anymore. And so with the defense contractors, they probably have a lot of security that makes it expensive for state actors to compromise them, and they do sometimes, but they choose their targets. And it’s sort of this cat-and-mouse game that just continues. As we try to make some more secure software, people make some more sophisticated tools to compromise it, and then you just iterate through that.
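
As a rough illustration of the economics described here (a sketch, not something worked through in the conversation): if each use of a zero-day carries some fixed chance of detection, and detection leads to a patch, then the number of uses you get follows a geometric distribution. The $1 million figure is the ballpark mentioned above; the 10% per-use detection probability is purely a hypothetical assumption, as are the function names.

```python
def expected_uses_before_burn(p_detect: float) -> float:
    """Expected number of uses of an exploit, counting the use that gets detected.

    Assumes each use is detected independently with probability p_detect,
    and that detection leads to a patch (the exploit is "burned").
    This is the mean of a geometric distribution: 1 / p_detect.
    """
    if not 0.0 < p_detect <= 1.0:
        raise ValueError("p_detect must be in (0, 1]")
    return 1.0 / p_detect


def dev_cost_per_expected_use(dev_cost: float, p_detect: float) -> float:
    """Development cost divided by the expected number of uses."""
    return dev_cost / expected_uses_before_burn(p_detect)


if __name__ == "__main__":
    dev_cost = 1_000_000  # ballpark from the conversation, in dollars
    p_detect = 0.10       # hypothetical assumption, not from the source
    uses = expected_uses_before_burn(p_detect)
    per_use = dev_cost_per_expected_use(dev_cost, p_detect)
    print(f"expected uses: {uses:.1f}, cost per expected use: ${per_use:,.0f}")
```

Under these assumptions, each target effectively costs the attacker a meaningful fraction of the exploit’s development cost, which is one way to read “they choose their targets”.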

Daniel Filan:

And I guess they also have the nice feature where the IP is not enough to make a missile, right?

Jeffrey Ladish:

Yes.

Daniel Filan:

You need factories, you need equipment, you need stuff. I guess that helps them.

Jeffrey Ladish:

Yeah. But I do think that a lot of military technology successfully gets hacked and exfiltrated, is my current sense. But this is hard… this brings us back to security epistemology. I feel like there’s some pressure sometimes when I talk to people, where I feel like my role is, I’m a security expert, I’m supposed to tell you how this works. And I’m like, I don’t really know. I know a bunch of things. I read a bunch of reports. I have worked in the industry. I’ve talked to a lot of people. But we don’t have super good information about a lot of this.

How many military targets do Chinese state actors successfully compromise? I don’t know. I have a whole list of “in 2023, here are all of the targets that we publicly know that Chinese state actors have successfully compromised”, and it’s 2 pages. So I can show you that list; I had a researcher compile it. But I’m like, man, I want to know more than that, and I want someone to walk me through “here’s Chinese state actors trying to compromise OpenAI, and here’s everything they try and how it works”. But obviously I don’t get to do that.

And so the RAND report is really interesting, because Sella and Dan and the rest of the team that worked on this, they went and interviewed top people across both the public and private sector, government people, people who work at AI companies. They knew a bunch of stuff, they have a bunch of expertise, but they’re like, we really want to get the best expertise we can, so we’re going to go talk to all of these people. I think what the report shows is the best you can do in terms of expert elicitation of what are their models of these things. And I think they’re right. But I’m like, man, this sucks that… I feel like for most people, the best they can do is just read the RAND report and that’s probably what’s true. But it sure would be nice if we had better ways to get feedback on this. You know what I mean?

Daniel Filan:

Yeah. I guess one nice thing about ransomware is that usually you know when it’s happened, because -

Jeffrey Ladish:

Absolutely, there’s a lot of incentive. So I think I feel much more calibrated about the capabilities of ransomware operators. If we’re talking about that level of actor, I think I actually know what I’m talking about. When it comes to state actors: well, I’ve never been a state actor. I’ve in theory defended against state actors, but you don’t always see what they do. And I find that frustrating because I would like to know. And when we talk about Security Level 5, which is “how do you defend against top state actors?”, I want to be able to tell someone “what would a lab need to be able to do? How much money would they have to spend?” Or, it’s not just money, it’s also “do you have the actual skill to know how to defend it?”

So someone was asking me this recently: how much money would it take to reach SL5? And it takes a lot of money, but it’s not a thing that money alone can buy. It’s necessary but not sufficient. In the same way where if you’re like, “how much money would it take for me to train a model that’s better than GPT-4?” And I’m like: a lot of money, and there’s no amount of money you could spend automatically to make that happen. You just actually have to hire the right people, and how do you know how to hire the right people? Money can’t buy that, unless you can buy OpenAI or something. But you can’t just make a company from scratch.

So I think that level of security is similar, where you actually have to get the right kind of people who have the right kind of expertise, in addition to spending the resources. The thing I said in my talk is that it’s kind of nice compared to the alignment problem because we don’t need to invent fundamentally a new field of science to do this. There are people who know, I think, how to do this, and there [are] existing techniques that work to secure stuff, and there’s R&D required, but it’s the kind of R&D we know how to do.

And so I think it’s very much an incentive problem, it’s very much a “how do we actually just put all these pieces together?” But it does seem like a tractable problem. Whereas AI alignment, I don’t know: maybe it’s tractable, maybe it’s not. We don’t even know if it’s a tractable problem.

Daniel Filan:

Yeah. I find the security epistemology thing really interesting. So at some level, ransomware attackers, you do know when they’ve hit because they show you a message being like “please send me 20 Bitcoin” or something.

Jeffrey Ladish:

Yeah. And you can’t access your files.

Daniel Filan:

And you can’t access your files. At some level, you stop being able to know that you’ve been hit necessarily. Do you have a sense for when do you start not knowing that you’ve been hit?

Jeffrey Ladish:

Yeah, that’s a good question. There’s different classes of vulnerabilities and exploits and there’s different levels of access someone can get in terms of hacking you. So for example, they could hack your Google account, in which case they can log into your Google account and maybe they don’t log you out, so you don’t… If they log you out, then you can tell. If they don’t log you out, but they’re accessing it from a different computer, maybe you get an email that’s like “someone else has logged into your Google account”, but maybe they delete that email, you don’t see it or whatever, or you miss it. So that’s one level of access.

Another level of access is they’re running a piece of malware on your machine, it doesn’t have administrator access, but it can see most things you do.

Another level of access is they have rooted your machine and basically it’s running at a permissions level that’s the highest permission level on your machine, and it can disable any security software you have and see everything you do. And if that’s compromised and they’re sophisticated, there’s basically nothing you can do at that point, even to detect it. Sorry, not nothing in principle. There are things in principle you could do, but it’s very hard. I mean it just has the maximum permissions that software on your computer can have.

Daniel Filan:

Whatever you can do to detect it, it can access that detection thing and stop it.

Jeffrey Ladish:

Yeah. I’m just trying to break it down from first principles. And people can compromise devices in that way. But oftentimes it’s noisy and if you are, I don’t know, messing with the Linux kernel, you might… How much QA testing did you do for your malware? Things might crash, things might mess up, that’s a way you can potentially detect things. If you’re trying to compromise lots of systems, you can see weird traffic between machines.

I’m not quite sure how to answer your question. There’s varying levels of sophistication in terms of malware and things attackers can do, there’s various ways to try to hide what you’re doing and there’s various ways to tell. It’s a cat and mouse game.

Daniel Filan:

It sounds like there might not be a simple answer. Maybe part of where I’m coming from is: if we’re thinking about these security levels, right -

Jeffrey Ladish:

Wait, actually I have an idea. So often someone’s like, “hey, my phone is doing a weird thing, or my computer is doing a weird thing: am I hacked?” I would love to be able to tell them yes or no, but [I] can’t. What I can tell them is: well, you can look for malicious applications. Did you accidentally download something? Did you accidentally install something? If it’s a phone, if it’s a Pixel or an Apple phone, you can just factory reset your phone. In 99% of cases, if there was malware it’ll be totally gone, in part because [of] a thing you alluded to, which is that phones and modern computers have secure element chips that do firmware and boot verification, so that if you do factory reset them, this will work. Those things can be compromised in theory, it’s just extremely difficult and only state actors are going to be able to do it.

So if you factory-reset your phone, you’ll be fine. That doesn’t tell you whether you’re hacked or not though. Could you figure it out in principle? Yeah, you could go to a forensic lab and give them your phone and they could search through all your files and do the reverse-engineering necessary to figure it out. If someone was a journalist, or someone who had recently been traveling in China and was an AI researcher, then I might be like, yes, let’s call up a forensic lab and get your phone there and do the thing. But there’s no simple thing that I can do to figure it out.