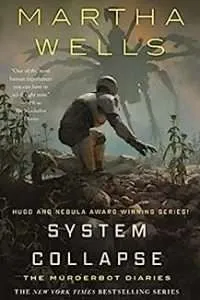

TL;DR: The new Murderbot book, System Collapse, is very, very good and if you were a fan of the other books in the series you’ll likely enjoy this one just as much.

A small piece of framing for this review, so you can better understand the praise that is about to erupt: I have been having a lot of trouble reading text.

This has been happening intermittently for a few months now, but has been much more acute since early October. No need for guesses as to why; I definitely know why.

I can listen, and audiobooks have been wonderful for me, but each time I try to read a book digitally or in print, I have a wretched time sinking into the story the way I usually do. I can submerge myself into an audiobook and it’s blissful, but the minute I try to read a book with my eyes, I bounce right off like it’s tucked inside a hard shell and I can’t crack the coating. (The pool cover is on! It’s not fair!)

This issue is particularly frustrating because I receive a lot of ARCs in digital galley form, and few audiobooks are available in advance of release date for a host of reasons – and to be clear, I am not complaining about having ARCs, or about the production time of an audiobook.

I’m complaining that my brain currently doesn’t like using my eyeballs to read and is refusing the input of text in any visual format. It’s unspeakably annoying.

On top of Brain Says No To Text Inputs, I’ve had a tiresome amount of insomnia where my brain cannot calm down. Last weekend, when I could not turn my brain off, I reached for the Murderbot series, and started System Collapse.

It was a good thing it was the night we changed the clocks back because not only did I deep-dive into the text, but I stayed up embarrassingly late reading. I didn’t gulp the book down, either, but read at a somewhat leisurely pace, following the story and nearly tearing up with relief that I was reading. I went to sleep, but kept reading, and finished the book the following day. I read, slept, had coffee, had lunch, and read. That has not happened in a dreadfully long time.

So when I say that this book broke not only a reading slump but a reading block, that’s what I mean. I’m far too tired to load the squee cannon with anything but cotton balls, so trust me when I say, squee incoming. A tired squee, but squee nonetheless.

So, anyway, a review of the book.

System Collapse starts shortly after the end of Network Effect, with Murderbot and ART, along with humans from Preservation and from the University of Mihira and New Tideland, trying to figure out how to help several colonies of humans who have been abandoned and are suffering from alien remnant contamination. In addition to Murderbot and ART, there’s also a…

Spoiler:

second rogue SecUnit, Three, who has a very small role in this book, but I imagine is going to inspire reams of fanfic.

When Murderbot and some of the humans learn that there’s a possible third group of colonists who have also been abandoned and refused contact with the other groups for forty years, they try to find and help them.

Spoiler:

Part of ART’s crew’s activities under the guise of university research include forging contracts and charters for colonies and planets abandoned by corporations in order to help those colonists avoid being conscripted into unpaid labor for other corporations. The addition of a second or third group of colonists adds impossible complications to their plans to forge charters to help them all.

Adding to the complexity of the situation is Murderbot’s redacted commentary in the first third or so of the book. Murderbot, as usual, narrates the story in the first person, and parts are redacted, specifically parts about an event in the recent past and the resulting emotions and anxiety.

The redacted portions are later explained, but in the initial chapters, I wondered who exactly Murderbot was hiding information from: itself or us? Removing parts of its narrative or hiding them from itself doesn’t aid the situation inside Murderbot’s head. That situation is not great, and Murderbot has to keep going while, on the inside, its mental health and emotional stability are collapsing. Whenever someone asks how Murderbot is, the reply is “I’m fine. I’m fine, everything’s fine.”

Murderbot’s redacted account is one of many examples of how the story gently unpacks how our identity and our connection to others are found in the stories we tell one another and how honest our motivations are as we tell those stories. The themes from the prior books also continue to evolve and take on additional meaning, especially the importance of being recognized as a person as opposed to a tool or as an asset to be leveraged and exploited.

I think one reason this book was so easy for me to engage with is that it starts and then it GOES. Ever been on one of those roller coasters where you get in the little cab and sit there with your restraint on, and the folks there do a little safety briefing, check the restraints, and give some hand signals and everything is just chill, but then immediately out of the gate you drop into terrifying speeds and just GO, like the stillness before never was? This book is like that.

Murderbot is dealing with immediate problems and past problems, and the immediate ones are similar enough to the past ones that they exacerbate one another. It’s a bit of an adrenaline read. Everything that’s happening around Murderbot (and everything is indeed happening so freaking much) is heightening the chaos and instability inside Murderbot, and both sets of problems keep building speed. Murderbot is also trying really, really hard to not need help, and to not accept help, to be an island unto itself because surely that would hurt less. And of course, that is not working because that’s not how beings work: your system of one will collapse without the support of others.

It’s also interesting to me that in Murderbot’s world, there is lots and lots and I mean luxuriant, unimaginable amounts of mental health support. There are trauma protocols, and if the existing protocols don’t work, the humans will come up with newer, better ones, and there are whole med systems with mental health just built into the environment. It’s as if mental health is inextricably connected to physical health and should be treated as the same in importance and attention! Can you imagine?!

And yet, even with the ease of psychological and mental assistance, and the truly gobsmacking availability of mental healthcare in that world, the humans AND Murderbot are often just as resistant to using it! The more things change, the more they stay the same, I guess?

Spoiler:

I also want to say that my absolute favorite part of this book was that the humans on the isolated splinter colony had their own media collection, which Murderbot and ART were rather excited about, and one of the programs is called

Cruel Romance Personage.

Cruel. Romance. Personage.

I cannot. I am unable to can, due to being entirely tickled by this title.

There had better be some fanart of the series title screen, is all I am saying here.

I could go on for another nine thousand words about all the ways this novel examines the elemental desire to tell a story, and the motivations behind selling a particular narrative. I’m still thinking about all the different ways that powerful storytelling and convincing arguments (or “argu-cussions”) are explored in this novel.

All of these people are dedicated to helping, to rescuing people in terrible circumstances, and I was both comforted and inspired by their determination to keep trying, even when things are decidedly Not Fine. My favorite parts of the past Murderbot books were all here: the sarcasm between the humans, Murderbot, ART, and other machine intelligences, and the absolute rush of the adventure plot coexisting alongside a thoughtful, nuanced examination of what it means to be a person, and how difficult it is to have emotions and feelings. The series as a whole and this book individually are about how important it is to recognize the autonomy of other beings, and for one’s own personhood to be recognized and acknowledged.

I’ll probably start over at book one, and read the series through again. I think this might be the seventh or eighth time? Either way, I have little to no objectivity about this series, and I loved this book.

Hey ho! Let’s go!

Dear Martha Wells,
